Weekly ML for SWEs #56: Avoiding brain rot is the key to success

An AI reading list curated to make you a better engineer: 6-24-25

Hello all and welcome to Machine Learning for Software Engineers! Each week I share a thought and dump some resources and interesting articles/videos to help you become a better engineer. If you want this in your inbox each week, make sure to subscribe. You’ll also get more detailed articles including case studies, explanations, build tutorials, job market analyses, and more!

We’re very close to bestseller status! ML for SWEs will be $3/mo until we hit it, and then it goes up to $5/mo. If you want to get ALL content, become a paid subscriber now to save.

Usually I use this article to share something valuable we learned about AI and/or software engineering from the past week, but this week I want to talk about something even more important and impactful. I want to talk to you about your time.

This is something I’ve been thinking about a lot recently. I have 5 young kids, a full-time job, and I write these articles. That’s what I find takes up most of my time.

On top of that I’d like to spend more time cooking dinner for my family (my wife pulls the weight there), spend more time with my kids, spend more intentional time with my wife (i.e. plan things to do), get back into swimming (preferably join a team), and clear my backlog of Steam games and books I want to read.

I want to do a lot. Most of you reading this probably feel the same way; otherwise you wouldn’t be spending your free time learning machine learning on top of the software engineering you already know.

I’ve been thinking a lot about how much time I can actually spend on each if I want to do them all and whether or not it’s even possible. Because I’m a nerd, I brought it back to numbers.

We’ve each got 168 hours in a week. Work takes up 40 of those. Sleep should take up 56 (at least). Let’s say exercise is 10 hours. Let’s devote about 25 hours to taking care of my house and/or focusing on my kids. Then let’s take the weekend “work time” and spend that in the same way: 18 more hours. I also think about 14 hours should be set aside each week to “do nothing”. These are hours to relax to ensure the rest of your hours are productive.

Where does that leave me? With 5 hours left for all the items on my list if I don’t sacrifice anywhere else. So the answer? No, doing everything isn’t possible. But this exercise put into perspective how valuable each hour I have is and made me think deeper about how I’m spending them.
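If you want to run the same exercise on your own week, here is a minimal sketch of the arithmetic in Python. The category names and the `budget` dictionary are my own labels for the numbers above, not a prescribed system; swap in your own.

```python
# Weekly time budget using the numbers from the paragraph above.
# Category names are illustrative labels, not a fixed scheme.
HOURS_PER_WEEK = 7 * 24  # 168

budget = {
    "work": 40,
    "sleep": 56,
    "exercise": 10,
    "house and kids": 25,
    "weekend house and kids": 18,
    "do nothing": 14,
}

allocated = sum(budget.values())
remaining = HOURS_PER_WEEK - allocated

for category, hours in budget.items():
    share = hours / HOURS_PER_WEEK
    print(f"{category:<24}{hours:>4} h  ({share:.0%})")
print(f"{'left for everything else':<24}{remaining:>4} h")
```

Changing any single number makes the tradeoff obvious right away: every hour added to one category comes straight out of the hours left over.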

This tweet hit home yesterday and made me come back to this exercise. 👇

Naturally, after examining how I spend my time, I turned to Screen Time on my phone and realized just how much time I had been spending on YouTube Shorts. It wasn’t ridiculous by any means, but I’ve never finished scrolling YouTube Shorts and thought, “That was a good use of my time.”

So I resolved to fix this and spend more time on things I actually want to do. Things that I can look back on and think, “That was a good use of my time.”

My challenge for you is to track how you spend your time and do a retrospective. Think about what you’re doing with your time and then think about what you could be doing with that time. Don’t beat yourself up over not spending time wisely—just use it to gain some perspective.

The biggest thing: Avoid brain rot. I can’t think of a single social media platform without it. It’s okay to spend time on social media and it’s okay to spend time not doing things, but ensure the time you spend isn’t working against you. There’s a resource in the “Other interesting things” section below to help avoid brain rot that I found interesting. 😊

To close, here are a few more thoughts I’ve had recently about social media:

You don’t have to make your work your entire life.

You don’t always have to be “grinding”.

You don’t have to care what strangers think about anything you’re doing.

The opposites of these 3 ideas are perpetuated constantly on social media, and they couldn’t be further from the truth. But if you spend enough time reading them, it’s only a matter of time before you start believing them.

I’ve written about social media many times before—especially from the angle of understanding the impact of recommendation algorithms. You can check them out here:

In case you missed ML for SWEs last week, you can learn the best way to keep up with AI research papers here. Enjoy the resources below and don’t forget to subscribe to join us!

Resources to become a better engineer

This is a callback to last week’s article and I was incredibly grateful for this roundup this week. Limiting my exposure to social media caused me to miss most of these papers. No worries because Elvis had my back.

Less time online was a worthwhile tradeoff for sure, though.

Tensor Manipulation Unit (TMU): Reconfigurable, Near-Memory, High-Throughput AI

This paper introduces the Tensor Manipulation Unit (TMU), a novel AI accelerator that tackles performance bottlenecks by being placed near memory and featuring a reconfigurable hardware fabric. This reconfigurable design allows the TMU to adapt its dataflow to different neural network layers, achieving up to 12.8x higher throughput and 7.4x better energy efficiency compared to state-of-the-art accelerators on models like Transformers.

This paper showed me I have a ton to learn about how TPUs work because I a) didn’t know a tensor manipulation unit could have such an impact and b) can’t fully grasp whether this is a huge deal or not important at all. Definitely worth checking out.

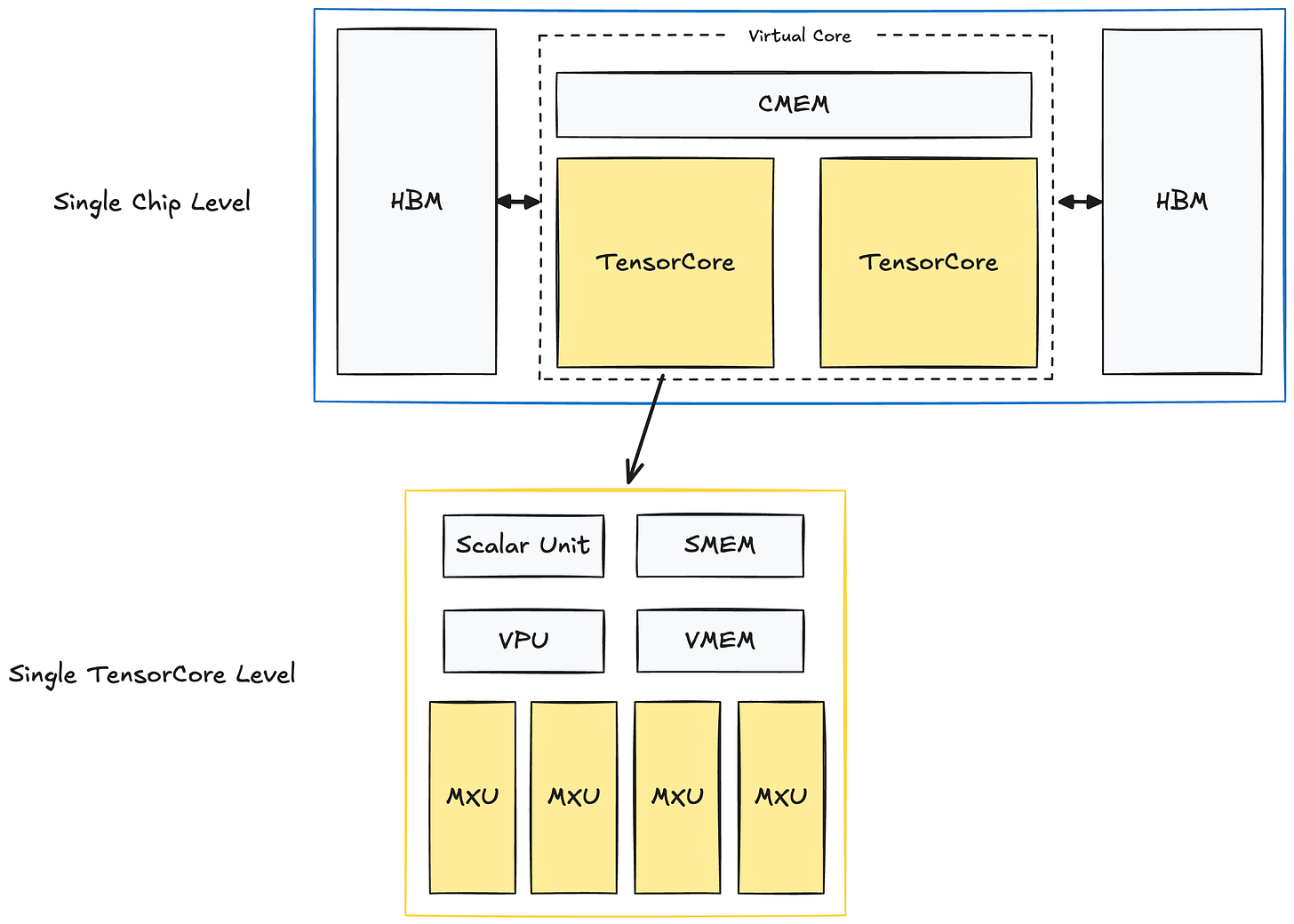

This is an interesting read about Google’s Tensor Processing Units (TPUs) which I largely consider to be Google’s primary advantage in the race toward ‘AGI’ (however we want to define it). My daily work is close to TPUs and they’ve been incredibly valuable for Google.

Google's TPUs are specialized ASICs that accelerate deep learning workloads through a domain-specific architecture centered on a systolic array. This key component enables massive parallel processing, performing thousands of matrix multiply-accumulate operations in a single clock cycle, often using reduced numerical precision (like INT8) for greater speed and efficiency.
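To make the systolic array idea a bit more concrete, here is a toy simulation in plain Python. It is a sketch under assumptions, not a description of real TPU internals: I model an output-stationary grid where each cell owns one output element and performs at most one multiply-accumulate per cycle, with operands arriving at cell (i, j) delayed by i + j cycles. The function names and the simple clamp-and-round quantization are hypothetical choices for illustration.

```python
def quantize_int8(values, scale):
    """Map floats to INT8 by dividing by scale, rounding, and clamping to [-128, 127]."""
    return [[max(-128, min(127, round(v / scale))) for v in row] for row in values]


def systolic_matmul(a, b):
    """Multiply a (M x K) by b (K x N) on a simulated M x N grid of MAC cells.

    Returns the product and the number of cycles the toy array needed.
    """
    m, k, n = len(a), len(b), len(b[0])
    acc = [[0] * n for _ in range(m)]  # one wide accumulator per cell
    cycles = m + n + k - 2  # time for the skewed wavefront to cross the grid
    for t in range(cycles):
        # In hardware every cell fires in the same clock cycle;
        # the nested loops here only simulate that parallelism.
        for i in range(m):
            for j in range(n):
                step = t - i - j  # which operand pair reaches cell (i, j) now
                if 0 <= step < k:
                    acc[i][j] += a[i][step] * b[step][j]
    return acc, cycles


a = quantize_int8([[0.5, 1.0], [1.5, 2.0]], scale=0.5)  # [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product, cycles = systolic_matmul(a, b)
print(product, "in", cycles, "cycles")
```

The point of the design is that an M x N grid finishes in roughly M + N + K cycles instead of the M * N * K sequential multiply-accumulates a single unit would need, and small INT8 operands keep each cell cheap.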

Andrej Karpathy: Software Is Changing (Again)

If you’re a frequent ML for SWEs reader, this information might not be new to you as we’ve discussed it many times, but Andrej Karpathy explains the shift AI is creating in software development. It’s worth a watch even if just a review.

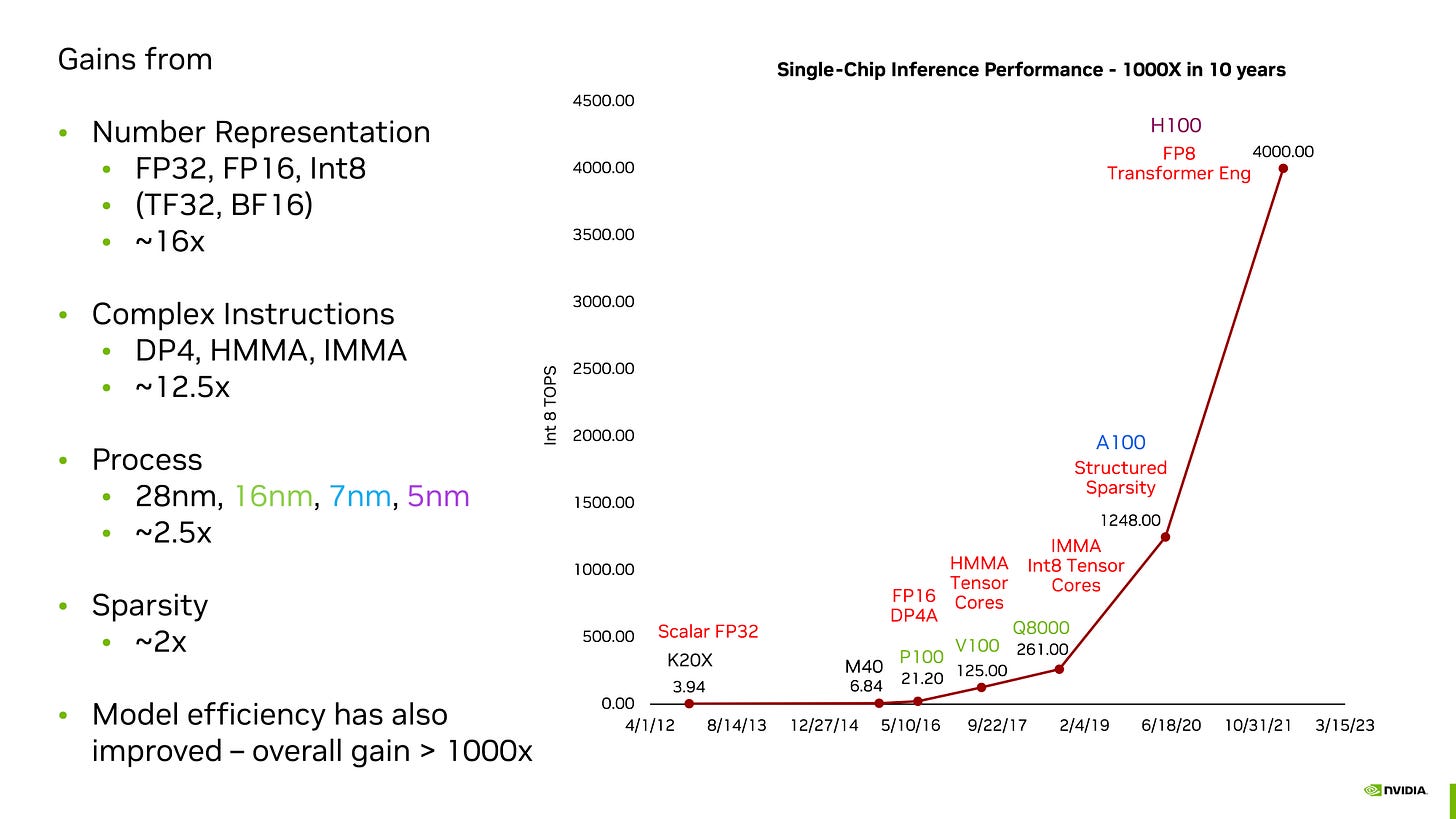

Huang's Law by

The biggest takeaway from this is that scaling hasn’t hit a wall.

Huang's Law, as described in the article, is an observation by Nvidia CEO Jensen Huang stating that GPU performance for AI applications roughly doubles every year, driven by combined hardware and software advancements. This rapid, annual doubling significantly outpaces the traditional Moore's Law rate and is considered a key technical driver behind the acceleration of AI capabilities and the AI boom.
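A quick back-of-the-envelope sketch shows how big that gap gets. I'm assuming the commonly cited two-year doubling period for Moore's Law and the one-year doubling described above for Huang's Law; both are rough observations, not guarantees, and `growth_factor` is just a helper name I made up.

```python
def growth_factor(years, doubling_period_years):
    """Total multiplier after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


# Assumed doubling periods: 1 year (Huang's Law), 2 years (Moore's Law).
for years in (2, 5, 10):
    huang = growth_factor(years, 1)
    moore = growth_factor(years, 2)
    print(f"{years:>2} years: Huang ~{huang:,.0f}x, Moore ~{moore:,.0f}x")
```

Under those assumptions, a decade of yearly doubling compounds to about 1,024x, versus about 32x when the doubling takes two years.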

Other interesting things

Using Home Assistant, adguard home and an $8 smart outlet to avoid brain rot

A tweet about an article claiming that “learn to code” is advice as bad as getting a face tattoo. Now is a great time to get into computer science, but my advice remains the same as it always has: Do it because you’re interested in it, not for the money.

The long-anticipated Tesla robotaxi service has launched in Austin. Big news and a serious competitor for Waymo. More competition is always better for consumers.

The lifeblood of the United States’ advantage is high-skilled immigration (yours truly). Without it, the US’s competitive advantage is reduced significantly.

Steve Jobs’ take on the meaning of life: Make something wonderful and put it out there. Check out the video below.

Thanks again for reading!

Always be (machine) learning,

Logan