Welcome to machine learning for software engineers. Each week, I share:

A lesson in AI from the past week

Some resources to help you become a better engineer

The interesting things that happened this past week

A short snippet about the job market including open positions.

Essentially, all the AI content software engineers should be aware of each week. Subscribe to get these directly in your inbox along with my topical articles focusing on specific machine learning engineering topics.

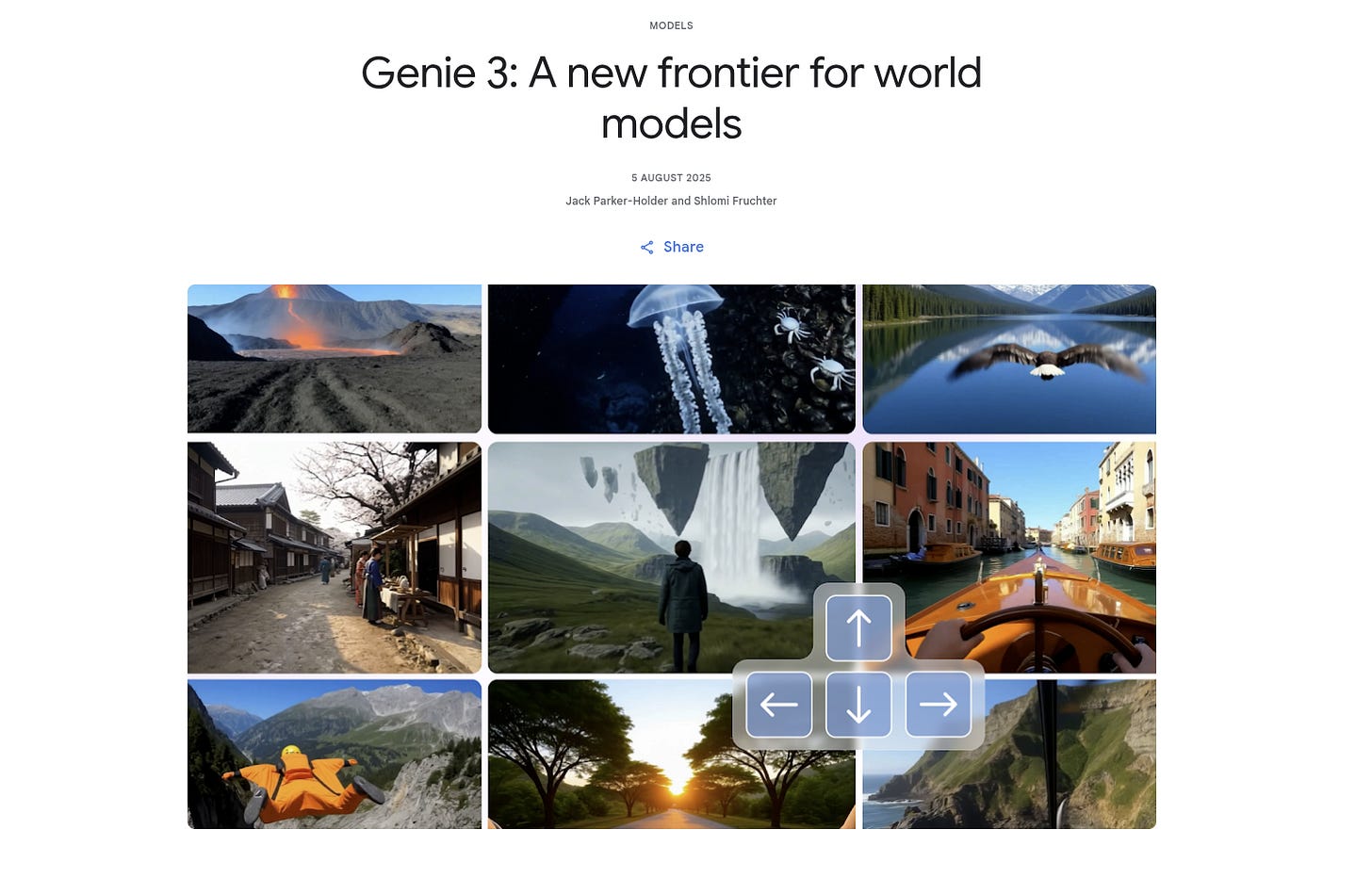

Google DeepMind released Genie 3 this past week. It's a world model that can simulate interactive environments from a single prompt.

World models are the breakthrough AI agents need to become useful and adapt to new situations quickly. I've heard a lot of complaints recently that AI companies overstate how useful and usable their agents are. World models address a key limitation behind those complaints and open up further use cases.

World models like Genie 3 are a breakthrough because they "make it possible to train AI agents in an unlimited curriculum of rich simulation environments." This means we can procedurally generate the environments we need to train AI agents on a variety of tasks.

One of the biggest limitations of AI agents today is that they need training data from a scenario to be useful in that scenario. Examples include self-driving cars, robotics, and digital games. This limitation applies to any physical AI that needs to interact with an environment.

This is an area where we critically lack data, and it's a big reason why the agents we see deployed today can be considered "glorified chatbots". We have the language data to create chatbots. We lack the data for other types of agents.

Genie 3 works by receiving a text description from the user describing a scene. It then creates a 720p environment running at 24 frames per second that can be navigated and interacted with. The model maintains visual consistency for several minutes and remembers what happened up to a minute ago.
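To put those numbers in engineering terms, here is a rough back-of-the-envelope sketch. It assumes "720p" means 1280x720 pixels, which is the common definition but isn't stated in the announcement, and it says nothing about how Genie 3 works internally. It only shows the output rate a real-time model at this resolution has to sustain.

```python
# Back-of-the-envelope numbers for a 720p, 24 fps interactive stream.
# Assumption: "720p" means 1280x720 pixels (16:9); the post only says "720p".
WIDTH, HEIGHT = 1280, 720
FPS = 24
MEMORY_SECONDS = 60  # "remembers what happened up to a minute ago"

frame_budget_ms = 1000 / FPS             # time available to produce each frame
pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS
frames_in_memory_window = FPS * MEMORY_SECONDS

print(f"Per-frame budget: {frame_budget_ms:.1f} ms")
print(f"Pixels per frame: {pixels_per_frame:,}")
print(f"Pixels per second: {pixels_per_second:,}")
print(f"Frames in a one-minute window: {frames_in_memory_window:,}")
```

Under these assumptions, the model has roughly 42 ms to produce each frame, and a one-minute memory spans 1,440 frames.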

World models enable:

Generating training scenarios by describing them.

Testing systems in conditions that would be expensive or dangerous to create in reality.

Creating variations of scenarios quickly without building new simulations.

Training agents on edge cases and rare events that are hard to capture in real data.

Simulating complex multi-agent interactions without coordinating real participants.

Prototyping and validating ideas before investing in expensive real-world testing.

A good example is autonomous vehicle testing. Instead of driving millions of miles or building complex simulations by hand, teams can generate thousands of scenarios: heavy rain at night, construction zones, emergency vehicles, pedestrians in different lighting conditions.
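Here's a minimal sketch of what "generating scenarios by describing them" could look like on the prompt side. Genie 3 has no public API, so this only builds the text descriptions. The scenario dimensions, the wording of the prompts, and the `build_prompts` helper are all hypothetical and purely for illustration.

```python
import itertools
import random

# Hypothetical scenario dimensions; the values are illustrative only.
WEATHER = ["clear skies", "heavy rain", "dense fog", "light snow"]
TIME_OF_DAY = ["midday", "dusk", "night"]
HAZARDS = [
    "a construction zone narrowing the road to one lane",
    "an emergency vehicle approaching from behind",
    "a pedestrian crossing outside the crosswalk",
]


def build_prompts(seed: int = 0) -> list[str]:
    """Return one text prompt per combination, in a reproducible shuffled order."""
    prompts = [
        f"A city street at {time} with {weather}, featuring {hazard}."
        for weather, time, hazard in itertools.product(WEATHER, TIME_OF_DAY, HAZARDS)
    ]
    # A seeded generator keeps the order stable across runs.
    random.Random(seed).shuffle(prompts)
    return prompts


prompts = build_prompts()
print(len(prompts))  # 4 * 3 * 3 = 36 combinations
print(prompts[0])
```

Three short lists already produce 36 distinct scenarios. Adding a fourth dimension, such as road type, multiplies that again, which is how a handful of descriptions can turn into thousands of test cases.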

World models are still in their early research days and have key limitations:

Generated scenarios may not accurately reflect real-world physics and constraints.

Models can hallucinate impossible or inconsistent behaviors.

Quality degrades significantly for scenarios outside the world model's own training distribution.

Computational requirements are enormous for high-fidelity simulations.

Current models struggle with long-term consistency and cause-effect relationships.

Limited ability to model complex systems with many interacting components.

Genie 3 is currently only available to a small group of researchers, but this proof of concept represents a shift in how we can think about AI training infrastructure. World models are positioning themselves as a foundational technology, similar to how transformers became the backbone of language models.

On a side note, they also enable automated video game and movie generation. Think of movie and game experiences personalized for each individual and generated in real-time. Theoretically, a game or movie a person enjoys could never end. The sequel could just be generated.

This brings me to a question I have for you: Do you think fully AI-generated feature films or AAA games will come first? Let me know in the comments.

If you missed last week's ML for SWEs, you can catch it here:

We learned about the importance of capturing feedback directly from users and the impact iterating quickly has. Check it out and enjoy the resources below!

Must-reads

1. OpenAI releases open models

OpenAI just released its first open-weight language models since GPT-2: GPT-OSS-120B for datacenters and GPT-OSS-20B for local machines. Reviews so far seem positive. This is a big step for open models and shows OpenAI was serious about its promise.

2. My 2.5-year-old laptop can write Space Invaders

Simon Willison demonstrates his MacBook Pro M2 running GLM-4.5 Air locally via MLX generating a complete, working Space Invaders game in HTML and JavaScript on the first try. Peak memory usage was around 48GB on consumer hardware.

3. Three challenges in machine-based reasoning

Amazon's research team breaks down the core problems in automated reasoning: translating natural language into structured language, defining truth, and achieving definitive reasoning. If you're building AI systems, understanding these fundamental challenges is important.

4. I know when you're vibe coding

AI-generated code is easy to spot because it frequently doesn't follow project conventions. Learn to spot redundant implementations, inappropriate architectural choices, and other telltale signs that code was generated for speed over quality.

5. Gemini Embedding model

Google's Gemini Embedding text model is now generally available for building advanced AI applications. The model achieved over 81% correct answers in evaluations and showed a 3.6% recall increase, making it useful for RAG and context engineering applications.
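If "recall" is unfamiliar in a retrieval context, here's a minimal sketch of how recall@k is commonly computed for embedding-based search. This is the generic definition, not necessarily how the figures above were measured. The vectors are toy values I made up rather than real model output, and `cosine` and `recall_at_k` are illustrative helpers, not part of any Gemini API.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def recall_at_k(query, docs, relevant_ids, k):
    """Fraction of relevant documents found among the k most similar ones."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query, docs[doc_id]), reverse=True)
    hits = sum(1 for doc_id in ranked[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


# Toy 3-dimensional "embeddings"; real models produce far larger vectors.
docs = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.9, 0.1, 0.0],
    "c": [0.0, 1.0, 0.0],
    "d": [0.0, 0.0, 1.0],
}
query = [1.0, 0.2, 0.0]
relevant = {"a", "c"}

print(recall_at_k(query, docs, relevant, k=2))  # 0.5: only "a" is in the top 2
print(recall_at_k(query, docs, relevant, k=3))  # 1.0: "a" and "c" are in the top 3
```

In a RAG pipeline, higher recall means the documents that actually contain the answer are more likely to make it into the context window.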

Other interesting things this week

AI Developments:

Google's Genie 3: New world model generates interactive 720p environments at 24fps from text prompts. Supports real-time user navigation and dynamic world modification. Researchers call it a "stepping stone on the path to AGI" for training AI agents.

OpenAI introduces Stargate Norway - Details about this announcement remain limited.

Qwen-Image: 20B parameter model excelling at complex text rendering in multiple languages with precise image editing capabilities.

Google DeepMind's Game Arena: New open-source platform for rigorous AI model evaluation through strategic games.

AlphaEarth Foundations: AI model integrating petabytes of Earth observation data, available through Google Earth Engine.

Zuckerberg outlines Meta's AI vision for "personal superintelligence" as closer than expected.

Product Launches:

OpenAI launched "Study Mode" for college students, positioning ChatGPT as an always-available AI tutor despite concerns about misinformation.

KathaaVerse transforms books and movies into interactive text adventures, letting users direct plots in Harry Potter, Lord of the Rings, and more.

Kitten TTS: Ultra-lightweight 25MB text-to-speech model with 8 voices that runs entirely on CPU without GPU requirements. Open-source model enables privacy-focused edge AI applications on low-power devices like Raspberry Pi.

Ollama released new desktop apps for macOS and Windows with file drag-and-drop for PDFs, images, and code processing.

Research & Analysis:

Content-aware spaced repetition systems that understand flashcard semantic meaning, not just review patterns.

Only one-third of Americans have used AI for work - AP-NORC poll shows 37% use AI for work tasks while 60% use it for information searches, with younger demographics showing significantly higher adoption rates.

Technical Tools:

Gemini Embedding model generally available for RAG applications, achieving 81% accuracy with 3.6% recall improvement.

Don't Ban AI. Redesign Instruction - Schools increasingly block frontier AI models while providing educational AI platforms, but assessment methods remain largely unchanged.

How to Protect your AI by using Guardrails - AI failures occur quietly by scaling bad assumptions; teams should optimize for control before capability to prevent degrading trust and burning out teams.

Vibe code is legacy code - AI-generated code that nobody understands becomes technical debt immediately, suitable for prototypes but creating maintenance nightmares in production.

Security & Concerns:

Gemini CLI vulnerability allowed data exfiltration within 48 hours of release through exploits targeting the tool's default configuration.

New Yorker explores potential consequences of AI solving loneliness and reshaping human companionship.

Industry Analysis:

Interviewing Ross Taylor on the state of AI - Candid insights on the relentless pace of Chinese open models, organizational failures in major LLM efforts being "politics-bound" rather than talent-bound, and why rubrics are driving the next wave of AI applications.

Infrastructure & Energy:

Wyoming's new AI data center will consume more electricity than all state residents combined, potentially scaling to 10 gigawatts—double the state's total generation capacity.

This week's jobs

Three trends this week:

ML engineering positions are abundant.

Remote opportunities actually seem to be increasing.

New grad/junior AI positions are popping up much more frequently. I'm very surprised by this one, but it makes sense. There's far more demand than supply in AI, so companies will find more candidates by training junior engineers than by competing for experienced ones.

Graduate Opportunities:

TikTok - Multiple ML Engineer Graduate positions (San Jose, Seattle) focusing on e-commerce search, recommendation systems, and multimodal matching for video content

Quora - Remote ML Engineer New Grad working on Poe's cutting-edge LLM applications with end-to-end ownership

Nuro - AI Platform Software Engineer New Grad in Mountain View

Experienced Roles:

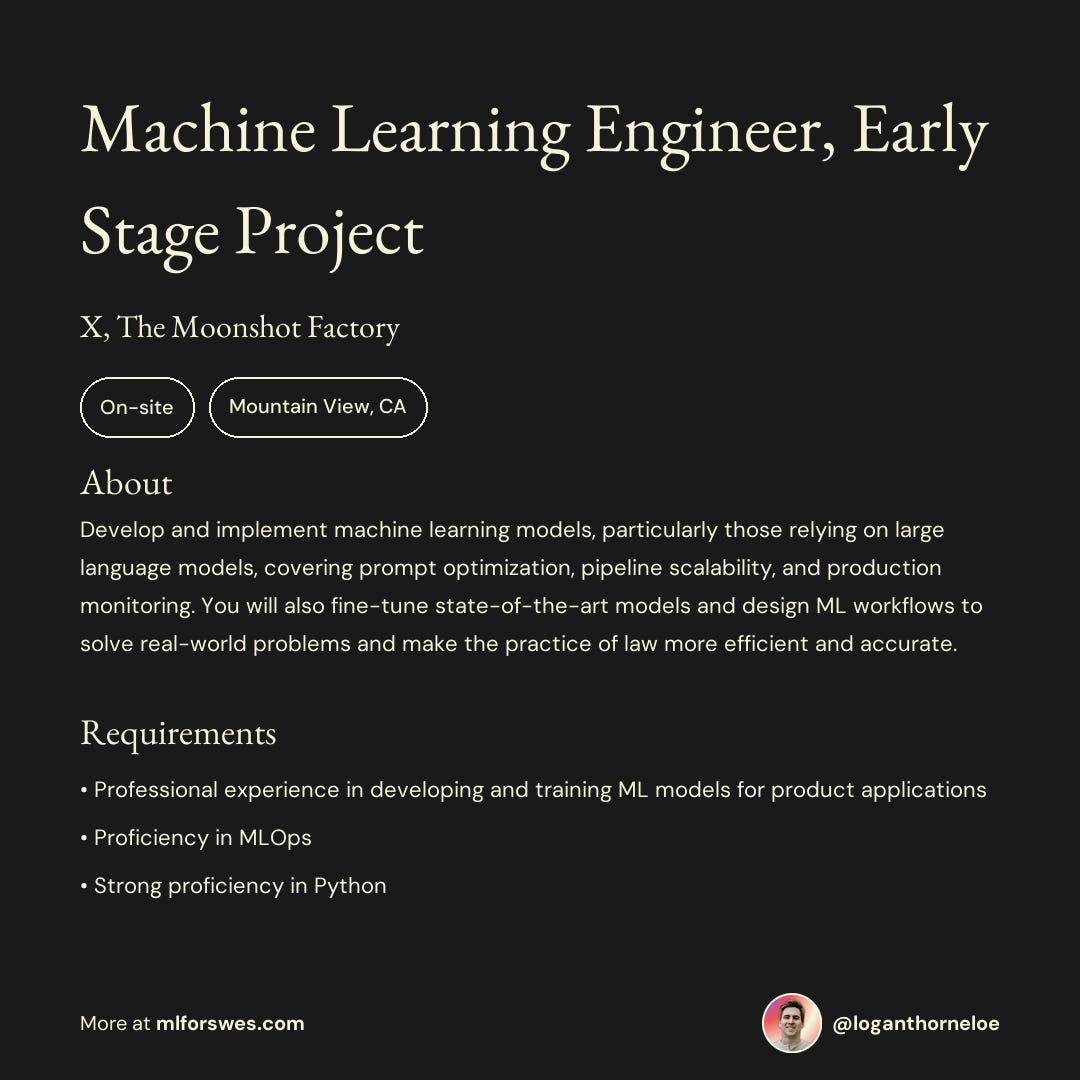

X, The Moonshot Factory - ML Engineer for early-stage moonshot projects in Mountain View

Netflix - L5 ML Engineer for Studio Media Algorithms

Notion - Software Engineer, Machine Learning in San Francisco

Yahoo - ML Engineer (Remote)

Cisco - Remote ML Engineer focusing on AI/ML models for threat detection and security analysis

That's all for this week.

If you found this helpful, consider supporting ML for SWEs by becoming a paid subscriber. You'll get even more jobs, resources, and interesting articles, plus a more in-depth monthly article on the AI job market.

Always be (machine) learning,

Logan
