This week’s roundup kicks off with the most important story in AI: OpenAI has completed its long-awaited restructuring. It is now a $500 billion for-profit Public Benefit Corporation (PBC), officially shedding the “capped-profit” model that defined it for years.

To understand what this means, here’s the 10-year evolution of its business model:

2015: The Non-Profit - OpenAI launches as a 501(c)(3) non-profit research lab. Its funding came from donations, not investments. It was legally barred from distributing profits to private owners or issuing stock, and was instead 100% focused on its mission to “benefit all of humanity.”

2019: The “Capped-Profit” Hybrid - Donations couldn’t cover its massive compute bills, so OpenAI creates a for-profit subsidiary (OpenAI LP). This radical change allowed OpenAI to take on venture capital (including from Microsoft) and issue equity, but to preserve the mission, it “capped” the financial returns for all investors.

2025: The For-Profit Giant - The “capped-profit” model is gone. The company is now the OpenAI Group PBC, with the non-profit parent renamed the OpenAI Foundation. This new structure removes the profit cap entirely, turning OpenAI into a conventional for-profit corporation. It can now raise unlimited capital and, crucially, offer uncapped stock to compete for talent with Google, Anthropic, Meta, and others.

This new structure is complex, but here are the three essential takeaways you need to know:

The “Capped-Profit” model is done. The old financial structure kept OpenAI from competing: you can’t attract the world’s best engineers and investors with capped returns. This move was a necessary decision to survive.

There’s concern the non-profit parent company may just be a formality. On paper, the non-profit “OpenAI Foundation” still controls the for-profit company. In reality, we saw this kind of structure fail in 2023 when the old, safety-focused board tried to oust Sam Altman. The ouster held for about five days until Altman came back and most of the board was replaced.

The public is pissed. OpenAI built its foundational models by scraping data under the banner of a non-profit mission to “benefit all of humanity.” Now, all that publicly-sourced work has been converted into the core asset of a $500 billion for-profit corporation. AI is a pivotal technology that will impact everyone. It was much more comforting to think of it being controlled by a non-profit entity rather than a for-profit corporation.

I haven’t had an update in two weeks. I’ve been taking a short break to navigate some things in my personal life, but I am back.

In case you missed the last issue of Machine Learning for Software Engineers, we discussed how top AI research labs will monetize.

Must Reads

PPO for LLMs: A Guide for Normal People

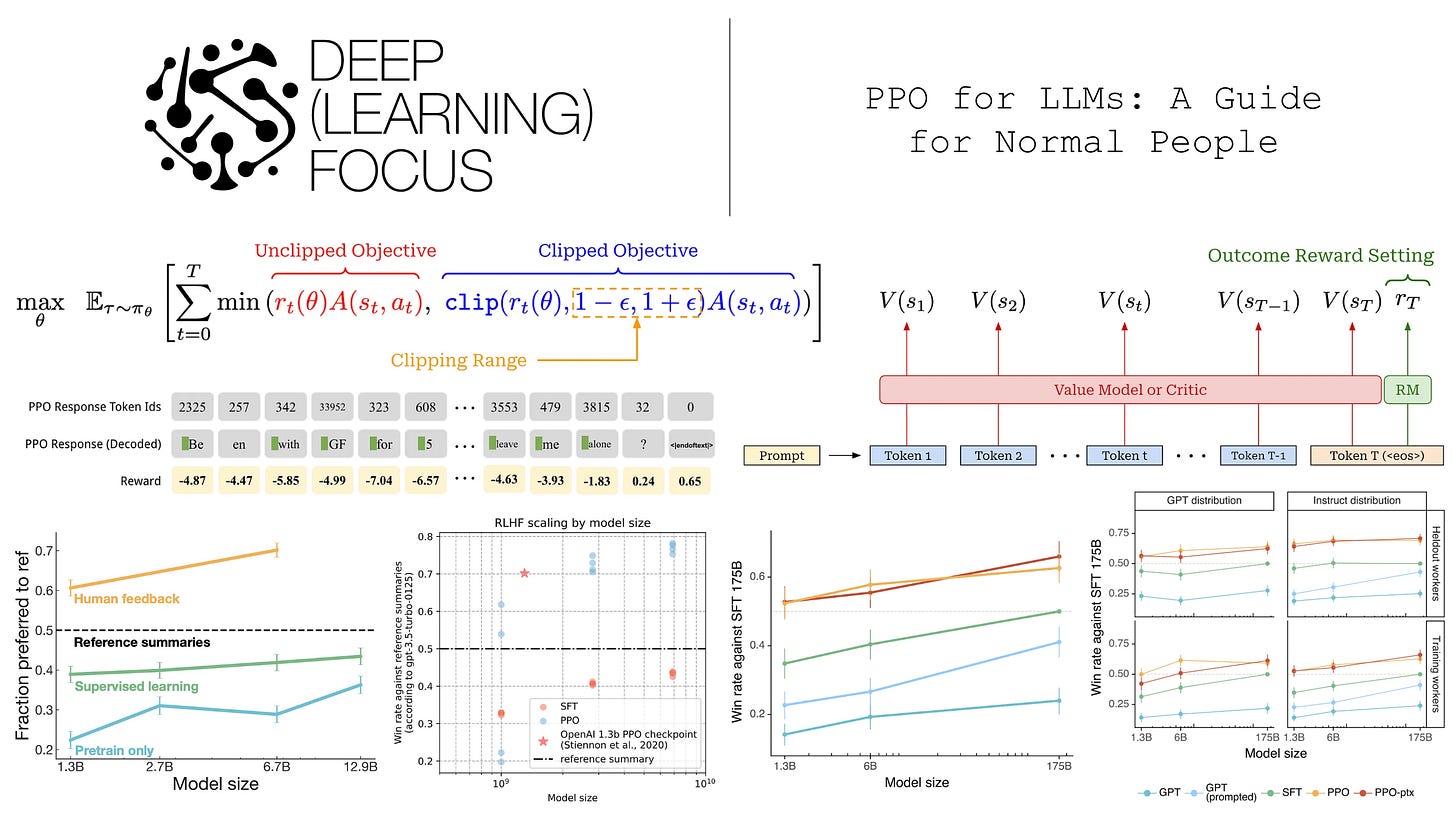

- PPO (Proximal Policy Optimization) is the primary RL algorithm used to align LLMs to human preferences: it optimizes a policy via policy-gradient updates using the per-token advantage A(s_t,a_t) (advantage = cumulative trajectory reward minus critic value), enforces a trust region via KL penalties to a reference/SFT model, and alternates between sampling completions and performing several optimization epochs on that sampled data.
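
To make that summary a bit more concrete, here is a minimal sketch of the pieces it names: a per-token KL penalty against a reference model, an advantage computed as cumulative reward minus the critic's value, and a policy update objective. The function names, the `beta` and `eps` values, and the toy numbers are all assumptions for illustration and are not taken from the guide. The clipped surrogate shown here is the standard PPO objective, which the summary above does not spell out.

```python
import math

def kl_shaped_rewards(final_reward, logp_policy, logp_ref, beta=0.1):
    """Per-token rewards: a KL-style penalty on every token, with the
    sequence-level reward added to the last token (beta is an assumed weight)."""
    rewards = [-beta * (lp - lr) for lp, lr in zip(logp_policy, logp_ref)]
    rewards[-1] += final_reward
    return rewards

def advantages(rewards, values):
    """Advantage at step t = cumulative reward from t onward minus critic value at t."""
    out, running = [], 0.0
    for r, v in zip(reversed(rewards), reversed(values)):
        running += r
        out.append(running - v)
    return out[::-1]

def ppo_clip_loss(logp_new, logp_old, adv, eps=0.2):
    """Standard PPO clipped surrogate, averaged over tokens and negated
    so that minimizing the loss improves the policy."""
    total = 0.0
    for ln, lo, a in zip(logp_new, logp_old, adv):
        ratio = math.exp(ln - lo)                    # pi_new / pi_old for this token
        clipped = max(1 - eps, min(1 + eps, ratio))  # keep the ratio near 1
        total += min(ratio * a, clipped * a)
    return -total / len(adv)

# Toy two-token completion (all numbers are made up).
rewards = kl_shaped_rewards(1.0, logp_policy=[-1.0, -2.0], logp_ref=[-1.0, -1.5])
adv = advantages(rewards, values=[0.5, 0.5])
loss = ppo_clip_loss(logp_new=[-1.0, -2.0], logp_old=[-1.0, -2.0], adv=adv)
print(rewards, adv, loss)
```

In a real training loop the same sampled completions would be reused for several optimization epochs, which is why the ratio between the new and old policy drifts away from 1 and the clipping starts to matter.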

Which Deep Research Web App should you be using?

- The author’s lab tested major “Deep Research” web chatbots across real-world tasks (AI, law, finance, fact-checking, code generation, forecasting) and ranks the top 5 based on real-use interface performance (not API benchmarks). Full detailed results and methodology are behind the author’s paid subscription.

The Machine Learning Practitioner’s Guide to Fine-Tuning Language Models - Fine-tuning should be a last resort after prompt engineering and RAG. Use parameter-efficient methods (LoRA/QLoRA/Spectrum) to update ~0.1%–3% of parameters, which makes fine-tuning 70B+ models feasible on consumer GPUs with no added inference latency. Typical learning rates are ~1e-4–2e-4 with 100–1,000 examples for small specialization or 1,000–100,000 for full fine-tuning.
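
A quick back-of-the-envelope sketch shows where numbers like "~0.1%–3% of parameters" come from. LoRA trains two small low-rank factors per weight matrix instead of the matrix itself, and those factors can be folded back into the base weight afterwards, which is why the adapter adds no inference cost once merged. The layer size (4096 x 4096), the rank (16), and the helper names below are assumptions chosen for illustration, not values from the guide.

```python
def lora_trainable_params(d_in, d_out, rank):
    """LoRA adds two low-rank factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)

def matmul(a, b):
    """Plain-Python matrix product, enough for a toy example."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def merge(w, b, a, scale=1.0):
    """Fold the adapter into the base weight: W' = W + scale * (B @ A)."""
    delta = matmul(b, a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]

# Assumed layer: one 4096 x 4096 projection with a rank-16 adapter.
d, r = 4096, 16
full = d * d
lora = lora_trainable_params(d, d, r)
print(f"{lora:,} of {full:,} weights trained ({lora / full:.2%})")  # 131,072 of 16,777,216 (0.78%)

# Toy merge: after merging, inference uses a single matrix again.
merged = merge([[1, 0], [0, 1]], b=[[1], [2]], a=[[3, 4]])
print(merged)
```

The exact fraction depends on the rank and on which layers get adapters, so treat the 0.78% figure as one point inside the range the guide quotes.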

7 Must-Know Agentic AI Design Patterns - Choose the pattern that matches task complexity: use ReAct (+tools) for investigative or iterative tasks, Reflection for self-correcting outputs, Planning for complex multi-step work, Multi-Agent when single agents hit capability limits, Sequential for fixed pipelines, and Human-in-the-Loop for high-risk decisions. Start with single-agent + tools and move to multi-agent only when specialization measurably improves outcomes.

DeepSeek drops open-source model that compresses text 10x through images - DeepSeek released DeepSeek-OCR (open-source weights/code), a model that compresses text into visual tokens up to ~10× (e.g., 700–800 text tokens → 100 vision tokens with 97.3% OCR accuracy), enabling potential context windows an order of magnitude larger and allowing one A100-40G GPU to process >200,000 pages/day.

Product & Industry

OpenAI completed its restructuring into a public benefit corporation, with Microsoft holding a roughly 27% stake valued at about $135 billion. The new Microsoft partnership extends IP rights through 2032 and requires independent verification of any OpenAI declaration of AGI.

Adobe launched AI assistants for Express and Photoshop that understand layers, auto-select objects, and automate repetitive edits. Photoshop now supports third-party models like Google Gemini 2.5 and FLUX.1 for generative fill.

Google AI Studio lets anyone describe an app and have Gemini generate a working React/TypeScript app in minutes. OpenAI shipped ChatGPT Atlas, a browser with built-in AI assistance.

MiniMax-M2 is the top open-weight LLM for agentic tool use, scoring 61 on Artificial Analysis’ Intelligence Index while running with 10B active parameters out of 230B total via an MoE architecture.

Anthropic expanded Claude for Financial Services with a beta Claude for Excel sidebar and new real-time data connectors from LSEG, Moody’s, and others. Claude Sonnet 4.5 tops the Vals AI Finance Agent benchmark at 55.3%.

Research

DeepSeek released DeepSeek-OCR, an open-source model that compresses text into visual tokens at 10x density with 97% accuracy. One A100 GPU can process over 200,000 pages per day, potentially enabling context windows an order of magnitude larger.

Google’s Willow quantum chip demonstrated verifiable quantum advantage on hardware, running a Quantum Echoes algorithm 13,000× faster than the best classical algorithm on a top supercomputer.

Hugging Face and Meta launched OpenEnv Hub to standardize agentic environments with sandboxed packages that define tools, APIs, and execution context for training and deploying agents.

Netflix announced it’s “all in” on generative AI as a tool to boost creative efficiency, citing early uses like a CGI building collapse in The Eternaut and other VFX work.

Learning & Code

PPO for LLMs: A Guide for Normal People explains how Proximal Policy Optimization works to align LLMs to human preferences through policy-gradient updates, KL penalties, and alternating between sampling and optimization epochs.

The Machine Learning Practitioner’s Guide to Fine-Tuning explains parameter-efficient methods like LoRA that make fine-tuning 70B models feasible on consumer GPUs with no added inference latency. Fine-tuning should be a last resort after prompt engineering and RAG.

7 Must-Know Agentic AI Design Patterns covers ReAct, Reflection, Planning, Multi-Agent, Sequential, and Human-in-the-Loop patterns. Start with single-agent + tools and move to multi-agent only when specialization improves outcomes.

NVIDIA launched a Learning Path offering courses, workshops, and certifications for practical deep learning skills.

Google launched Google Skills, a platform with 3,000 courses and AI labs.

Engineering & Tools

Addy Osmani breaks down some tips and tricks for using Gemini CLI for agentic coding.

Context Engineering tips for Google DeepMind Gemini: use append-only context for cache hits, manage tools statically, write context to external storage, have models restate objectives periodically, and keep error messages in context.
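
The first tip is easier to see with a toy example. Prompt caches generally reuse work only for the part of a request that exactly matches an earlier one from the start, so appending to the end keeps the whole earlier context reusable, while editing anything near the top throws it away. The sketch below models context as a list of messages; the class name, the helper, and the sample messages are hypothetical, and real caches operate on token prefixes rather than message tuples.

```python
def shared_prefix_len(old, new):
    """Number of leading items that two contexts have in common."""
    n = 0
    for a, b in zip(old, new):
        if a != b:
            break
        n += 1
    return n

class AppendOnlyContext:
    """Context that can only grow at the end, so earlier turns never change."""

    def __init__(self, system_prompt):
        self._messages = [("system", system_prompt)]

    def append(self, role, text):
        self._messages.append((role, text))

    def snapshot(self):
        return list(self._messages)

ctx = AppendOnlyContext("You are a coding agent.")
ctx.append("user", "Fix the failing test.")
first_call = ctx.snapshot()

# Keep the error message in context instead of rewriting history.
ctx.append("tool", "pytest: 1 failed (AssertionError)")
second_call = ctx.snapshot()

# Appending preserves the entire earlier request as a prefix.
print(shared_prefix_len(first_call, second_call) == len(first_call))  # True

# Editing the system prompt (e.g. injecting a timestamp) breaks the prefix at item 0.
edited = [("system", "You are a coding agent. Time: 12:01")] + first_call[1:]
print(shared_prefix_len(first_call, edited))  # 0
```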

Google Cloud launched Vertex AI Training, a managed Slurm service for enterprise-scale model training with automatic checkpointing, job recovery, and access to thousands of GPUs.

Anthropic launched Claude Code for web, an asynchronous hosted coding agent that runs in a sandboxed container with filesystem and network isolation, providing a web and mobile UI for executing coding tasks.

Safety & Ethics

Microsoft’s Mustafa Suleyman said Microsoft will never build sex robots and urged the industry to avoid creating seemingly conscious AI. He argues chatbots should have explicit boundaries to prevent mistaking lifelike behavior for consciousness.

OpenAI’s October update to ChatGPT’s default GPT-5 model improved its handling of mental and emotional distress. Developed with input from 170+ mental health experts, the update reduced responses that fall short of desired behavior by 65-80%.

Several leading AI forecasters and Andrej Karpathy revised their timelines outward from 2027 toward 2029-2032. They cite frontier labs focusing more on consumer products and safety-minded R&D, making rapid 2027-style takeoff less likely.

A developer argues AI is increasing work pressure by creating psychological compulsion to constantly leverage always-on tools. This drives burnout and reduces creativity as rest becomes perceived inefficiency.

Funding

Cartesia raised $100M from Kleiner Perkins to build real-time voice AI.

Mercor raised $350M at $10B valuation for AI talent matching.

Fal.ai raised $250M at $4B+ valuation for multimodal AI infrastructure.

Sesame raised $250M from Sequoia for conversational AI and smart glasses.

LangChain raised $125M at $1.25B valuation led by IVP.

Serval raised $47M from Redpoint for IT service management agents.

Wonder Studios raised $12M from Atomico for an AI-driven creative studio.

Cercli raised $12M from Picus Capital for an AI-native HR platform.

Shuttle raised $6M for automating deployment of AI-generated apps.

Tensormesh raised $4.5M to commercialize LMCache for inference cost reduction.

Adaption Labs reportedly raised $20-40M for adaptive learning AI.

Turbo AI, an AI notetaking app, grew to 5M users and eight-figure ARR.

Who’s Hiring

Salary ranges: Posted AI/ML engineering salaries this week include $168K-$334K (NVIDIA Senior Software Engineer), $154K-$226K (Stitch Fix Senior ML Engineer), and $150K-$200K (new grad roles at Together AI).

Common positions: Senior Software Engineer (ML/AI), Machine Learning Engineer, Computer Vision Engineer, Software Engineer (Systems ML), and Applied Scientist are the most frequently posted roles.

Top companies: NVIDIA, Google, Meta, Microsoft, Pinterest, Affirm, HubSpot, and Together AI have the most active AI/ML job postings this week.

Discussions

Tech companies are shoehorning AI into every product, often degrading core functionality and user experience. Examples include Gemini slowing down basic commands, Copilot being forced into Windows, and models being trained on scraped work without consent while user interactions are harvested to build detailed profiles.

The LLM arms race is forcing researchers into extreme, unsustainable 100-hour workweeks. Rising technical standards require ever more compute, data, tooling, and sustained focused effort, driving widespread burnout. Culture and team cohesion are now as critical as compute or code for long-term success.

Widespread reliance on LLMs is eroding programmers’ deep technical skills and documentation-reading habits. The author warns this risks increased technical debt, greater vulnerability during outages, and eventual job replacement as AI automates more engineering roles.

Several leading AI forecasters and Andrej Karpathy revised their timelines outward from 2027 toward 2029-2032. Frontier labs appear more focused on consumer products and safety-minded R&D, making an R&D-driven rapid takeoff significantly less likely.

AI is increasing work pressure by creating psychological compulsion to constantly leverage always-on tools, driving 996-style hours in some AI startups. This turns rest into perceived inefficiency, raising burnout and reducing creativity.

Other Things

OpenAI offered free ChatGPT Go to India users for 12 months starting November 4.

Zoom CEO Eric Yuan said AI will reduce workweeks to three or four days within five years.

Amazon launched “Help me decide”, an AI feature that analyzes your history to recommend products and explain why.

OpenAI acquired Software Applications Incorporated, makers of Sky, to bring natural-language desktop AI features into ChatGPT.

Wikipedia’s traffic fell 8% due to generative AI search summaries.

China’s generative AI user base doubled to 515 million in six months.

Meta cut about 600 AI jobs from its superintelligence lab.

Accounting firms are using AI agents for up to 30% time savings on routine tasks.

OpenAI released economic blueprints for South Korea and Japan on AI capability-building.

Thanks for reading!

Always be (machine) learning,

Logan