ML for SWEs Weekly #53: A New Way for Software Engineers to Learn ML Math

An AI reading list curated for software engineers: 6-2-25

If you find Machine Learning for Software Engineers helpful, consider becoming a paid subscriber. It helps me keep my articles ad- and sponsor-free so I can keep writing about what’s most important for you! Some things you’ll get include:

ML case studies

More resources to become a better engineer

Hands-on ML tutorials

Career enhancement articles

And more! It’s only $3/mo for the next 20 people and will be increasing to $5/mo afterward. Lock in 40% off forever:

Note: Check if you can get this reimbursed through your work. Many companies have a professional development budget they’re happy to use.

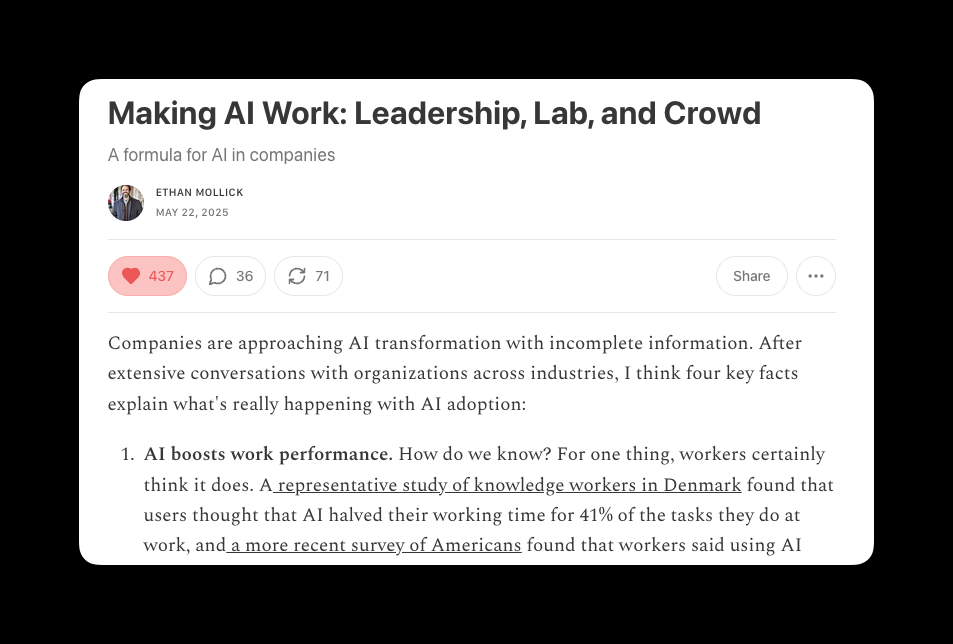

How to make AI work in practice

This is a very interesting read about how to make AI actually realize gains for those using it. We know it can do things, we know people use it, but how come we don’t see such an impact from it? As engineers building AI, this is what we care about: whether or not our products are actually used and how useful they are.

Interestingly, the first topic discussed is the impact leadership has on worker usage of AI within a company. I found this cool because leadership also has a massive impact on whether or not the AI companies can succeed at delivering value.

In previous articles, I’ve discussed the three pillars of AI that show whether a company will have long-term success: data, talent, and compute. All three must be met for a company to be successful in the long term. A fourth factor that can destroy a company from within, even if the other three are met, is leadership.

If leadership is steering the company in the wrong direction or inhibiting innovation that can come about via the three pillars, the company is doomed to fail.

I’m guessing the same can be said about companies using AI: if leadership steers them in the wrong direction and fails to identify how AI can help the company compete, the company will fall to its competitors.

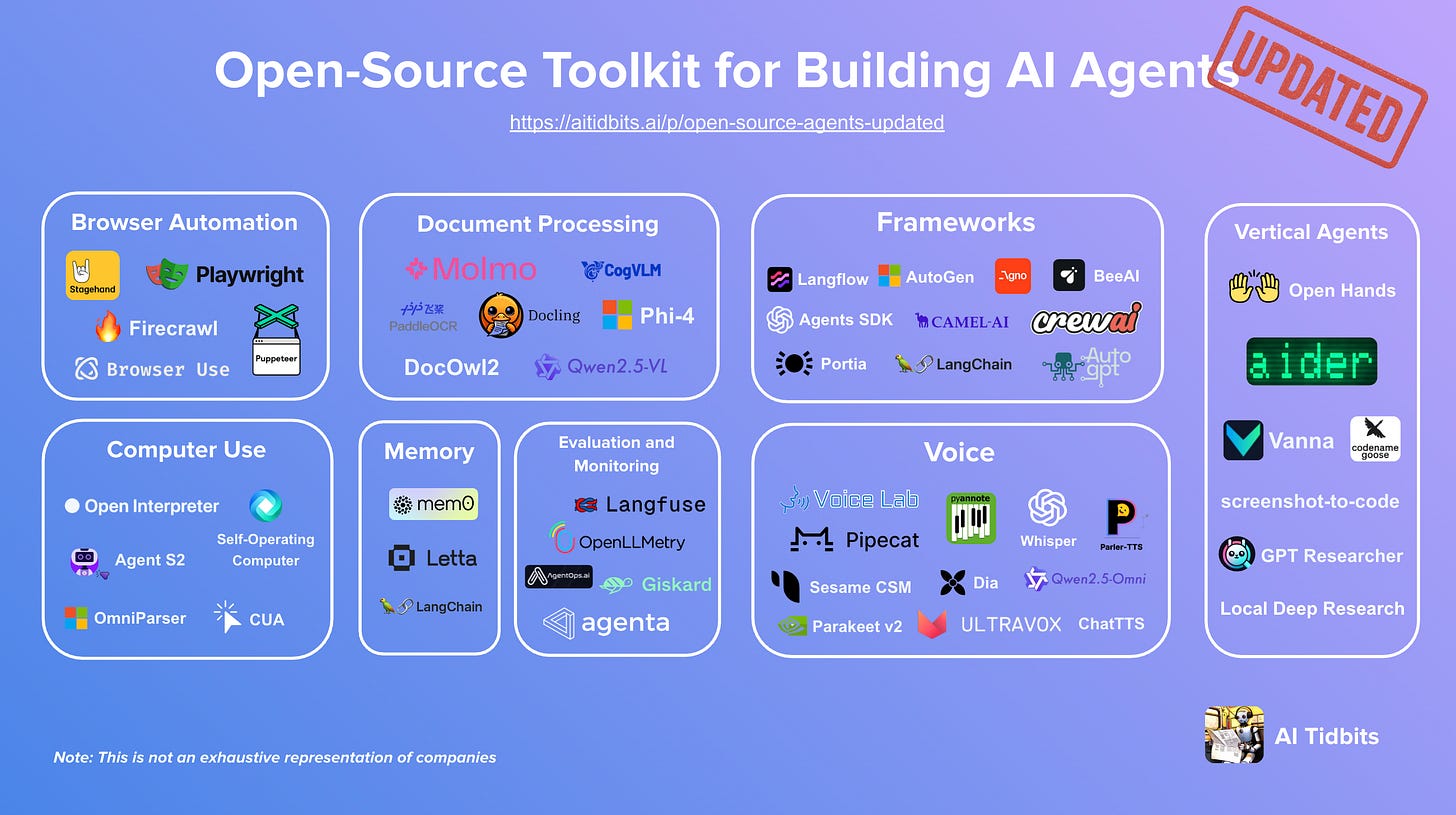

A guide to the open source tools currently used to build AI agents

If you want to know what tools are actually being used to build AI agents, Sahar’s guide on open-source tools for building AI agents is an excellent read. I love articles focused on what people are actually using and on putting machine learning into practice.

Sahar takes this one step further by listing only open-source tools with licenses that allow them to be used in commercial applications. Below is an image with a brief overview of all the tools and the categories they’re separated into.

Keeping up with open source tooling and tooling commonly used in practice is something I want to get better at. This truly is the one thing I miss by working at Google. We use our own internal tooling and it’s easy to fall behind in my knowledge of what everyone else is using. I appreciate Sahar’s work here to help me stay ahead.

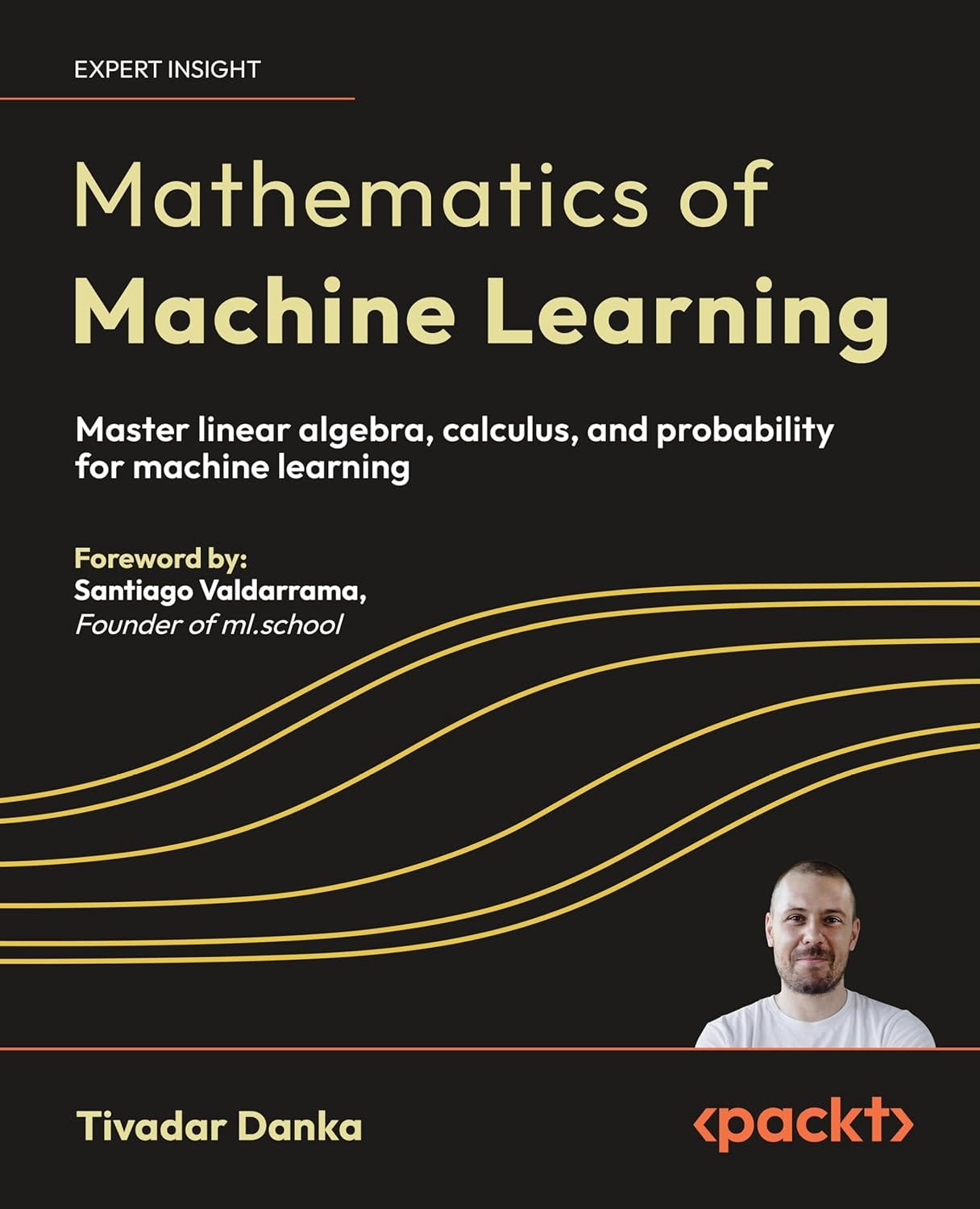

An excellent new way to learn ML math

I want to call out the new book The Mathematics of Machine Learning, which is hot off the presses. This is the culmination of four years of hard work and explains all the ML math concepts I tell software engineers to study to become familiar with AI and machine learning.

My copy of the book was supposed to arrive today and it didn’t, so I’m a bit upset, but tomorrow will have to do. I’ll be running through this book this month, and if it’s anything like the author’s earlier work, I will likely recommend it as my resource for anyone wanting to learn ML math (seeing as they have the same author, I’m certain it will be!).

You can pick up a copy for yourself on Amazon or the publisher’s website. If you purchase a hard copy at either location, you can redeem a code for a digital PDF of the book on Packt’s website.

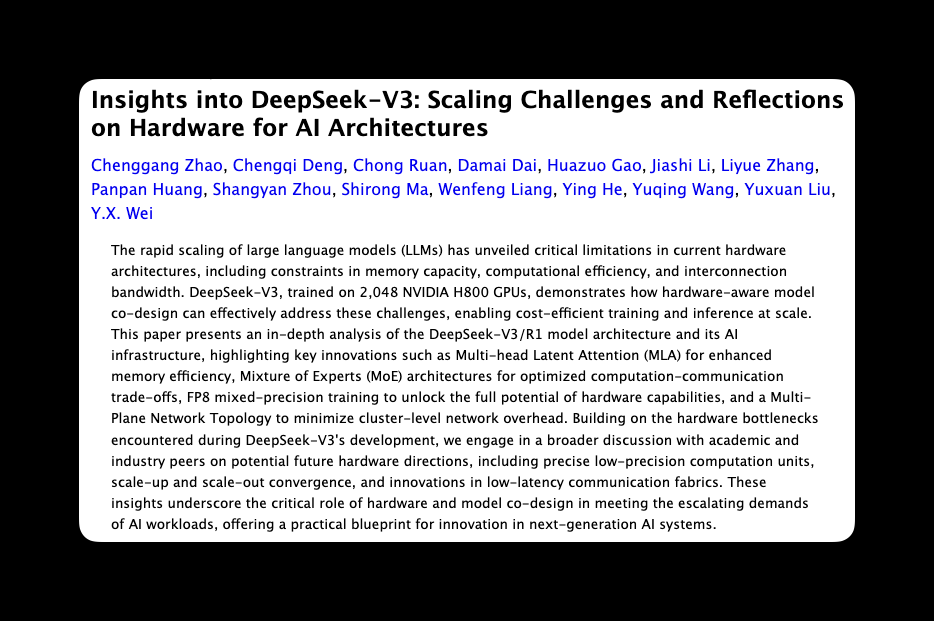

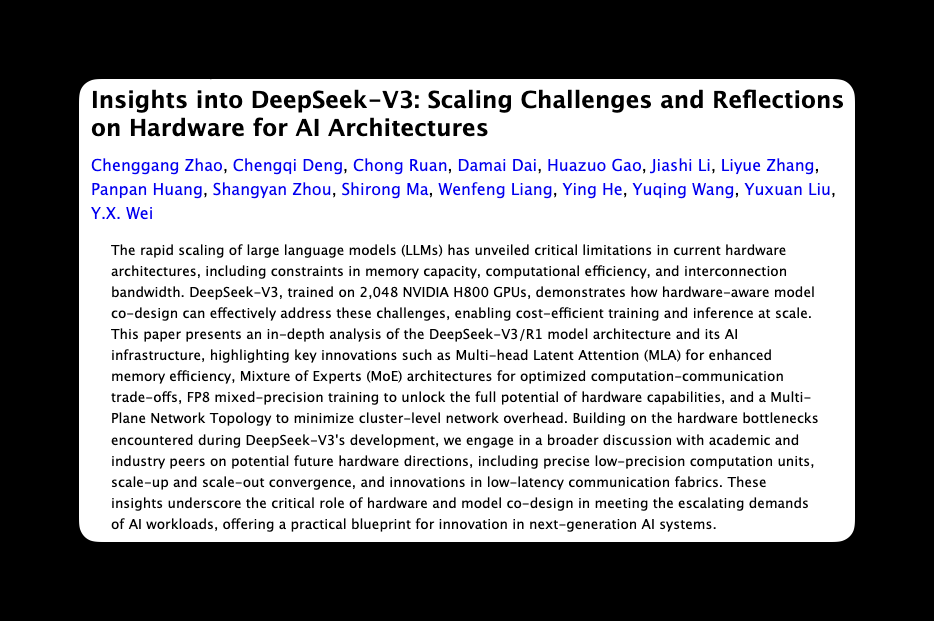

The DeepSeek team releases info on scaling challenges for DeepSeek-V3

The DeepSeek-V3 scientists released a paper detailing how hardware and infrastructure limitations make scaling AI difficult, along with the innovations they used to work around those limitations. This is particularly interesting because it explains the intersection of AI and great engineering.

This is a great read for understanding what makes machine learning infrastructure so difficult and why engineers who understand it are so in demand and paid so well. But maybe I’m a bit biased here because this is very close to what I work on at Google.

Open LLMs are free, right? Not quite.

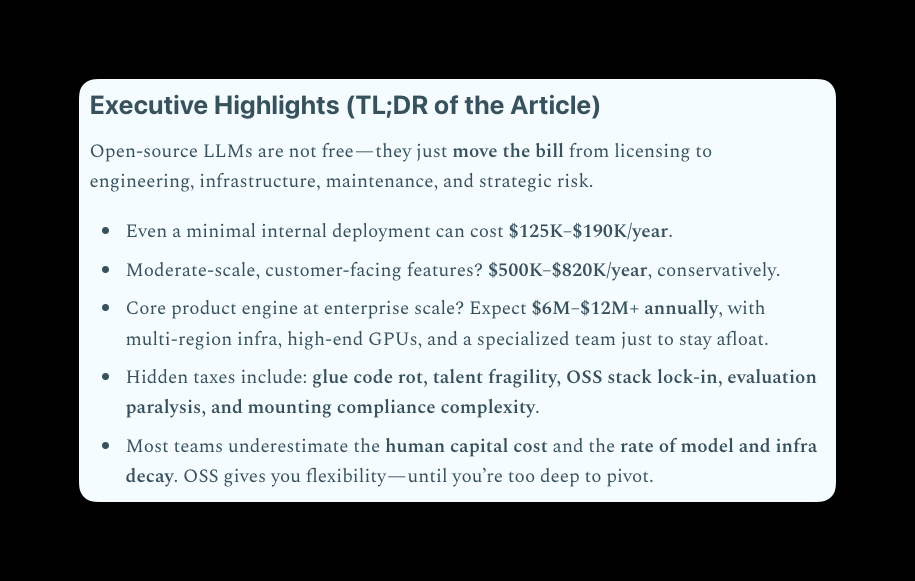

Lastly, everyone wants AI to be open source, but no one truly understands what that entails. The “open LLMs are free” argument is everywhere, but it’s simply not true. As an engineer working on AI, this is something you must understand or you won’t be successful.

The author’s most recent article breaks it down, and I highly recommend you read at least the Executive Highlights he’s included at the top. One huge takeaway from his article:

Open-source LLMs are not free; they just move the bill from licensing to engineering, infrastructure, maintenance, and strategic risk.

Guess who’s in charge of the engineering, infrastructure, and maintenance? You’re reading Machine Learning for Software Engineers, so that should give you a hint. This all gets passed on to you.

This is a great read, check it out:

More interesting things

I found the below Twitter post especially relevant to today’s article. As soon as tech companies view success as meeting expectations defined via processes, innovation greatly slows.

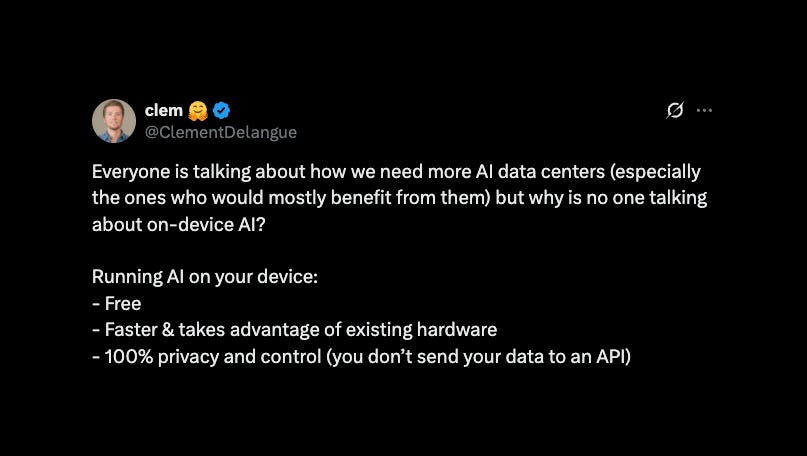

On-device AI will be the next big step in democratizing AI usage for all. This isn’t spoken about nearly as much as I thought it would be. Right now, companies expect consumers to pay subscriptions for using their servers for AI tasks. Simultaneously, companies are releasing beefy consumer hardware.

Getting AI running on the hardware consumers already have is the best way to ensure it’s accessible (and affordable!) for all. People don’t realize that pushing state-of-the-art AI performance and reducing state-of-the-art AI size are equally monumental advancements.

In case you missed it, I wrote an article about showing instead of telling and how it’s not only the best way to explain complex machine learning and scientific topics, but also the best way to communicate in general.

One of my goals in the near future is to get better at it and I urge you to do the same:

That’s all for this week! I’m a bit light on the interesting content this week because I spent much less time online, but I’ll be back with more next week.

Always be (machine) learning,

Logan
