Welcome to machine learning for software engineers. Each week, I share a lesson in AI from the past week, five must-read resources to help you become a better engineer, and other interesting developments. All content is geared towards software engineers and those who like to build things.

Subscribe to get these emails directly in your inbox.

This week felt like a watershed moment for AI development. GPT-5 launched with impressive capabilities, but the improvements were incremental rather than revolutionary. Multiple open-source models dropped simultaneously. Coding agents matured significantly. Beneath all these announcements, the vibe shifted.

The era of "just scale it bigger" appears to be ending.

For the past four years, AI progress followed a simple formula: more data + more compute + bigger models = better performance. This scaling approach delivered breakthroughs. We saw this with GPT, Gemini, Claude, Llama, and many other models.

But GPT-5's release signals that we're hitting the limits of pure scaling (think throwing money, compute, and time at models). The performance gains, while solid, aren't the massive leaps we've seen in the past. Granted, with near-unlimited compute these models would likely still improve significantly, but getting there would require major leaps in hardware capabilities.

The next phase of AI development will be won through smart engineering, not bigger budgets. This is why AI needs software engineers, and it points to three key engineering opportunities, both now and in the future:

System integration becomes a key differentiator. When raw model performance plateaus, how well AI integrates with existing workflows, databases, and user interfaces dictates its real-world usefulness and becomes the competitive advantage. We've heard time and time again that the application layer is king, and that's where software engineers shine.

Creative engineering solutions win over brute-force approaches. History shows that when pure scaling hits limits, innovative engineering breakthroughs emerge. We saw this most recently with inference-time scaling (reasoning): when pure training scaling hit a wall, creative engineering found a way to keep scaling. This will continue to be the case.
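One of the simplest illustrations of inference-time scaling is self-consistency: sample several answers to the same prompt and keep the one that appears most often. The sketch below is a minimal Python version of that idea, not how GPT-5 or any particular reasoning model works internally (those also scale compute in other ways, such as longer chains of thought). `sample_answer` is a hypothetical stand-in for a stochastic model call, and `make_noisy_model` is a made-up toy sampler used only for demonstration.

```python
import random
from collections import Counter
from typing import Callable


def self_consistency(sample_answer: Callable[[str], str], prompt: str, n_samples: int = 8) -> str:
    """Sample several answers to the same prompt and return the most common one."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    # Each call stands in for one stochastic model generation; more samples = more inference compute.
    answers = [sample_answer(prompt) for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


def make_noisy_model(accuracy: float, seed: int = 0) -> Callable[[str], str]:
    """Hypothetical toy 'model': answers "42" with probability `accuracy`, otherwise guesses randomly."""
    rng = random.Random(seed)

    def sample(prompt: str) -> str:
        if rng.random() < accuracy:
            return "42"
        return str(rng.randint(0, 100))

    return sample


if __name__ == "__main__":
    model = make_noisy_model(accuracy=0.4)
    print("single sample:", model("What is 6 * 7?"))
    print("majority of 31:", self_consistency(model, "What is 6 * 7?", n_samples=31))
```

Majority voting tends to help when wrong answers are scattered while correct answers agree, which is why even a sampler that is right less than half the time can usually win the vote. The trade-off is plain: quality is bought with more inference calls, not a bigger model.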

Efficiency and optimization become core competencies. With diminishing returns from scaling, making existing models faster, cheaper, and more reliable becomes essential to improving their real-world applicability. Making models smaller and more efficient is fundamentally a software engineering problem that will need to be solved.
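Quantization is one concrete example of this kind of work: storing weights as 8-bit integers instead of 32-bit floats cuts weight memory by 4x at the cost of some precision. The sketch below shows symmetric per-tensor int8 quantization in plain Python. It is an illustration only; real toolkits typically add per-channel scales, calibration data, and optimized integer kernels, and the function names here are our own.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: one float scale maps weights onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range we use.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.01, 0.003]
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    print(q, scale)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, approx)))
```

Each weight's reconstruction error is at most half a quantization step (`scale / 2`), which is why a single large outlier weight, by inflating `scale`, degrades precision for every other weight in the tensor.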

The companies that lead the next wave of AI won't be those with the largest budgets and biggest models. They'll be those able to solve core software engineering complexities and apply models to real-world scenarios.

What other opportunities do you see emerging as the pure scaling era ends?

If you missed last week's ML for SWEs, you can catch it here:

We learned about world models and the key role they play in scaling AI agents. Check it out and enjoy the resources below!

Must-reads

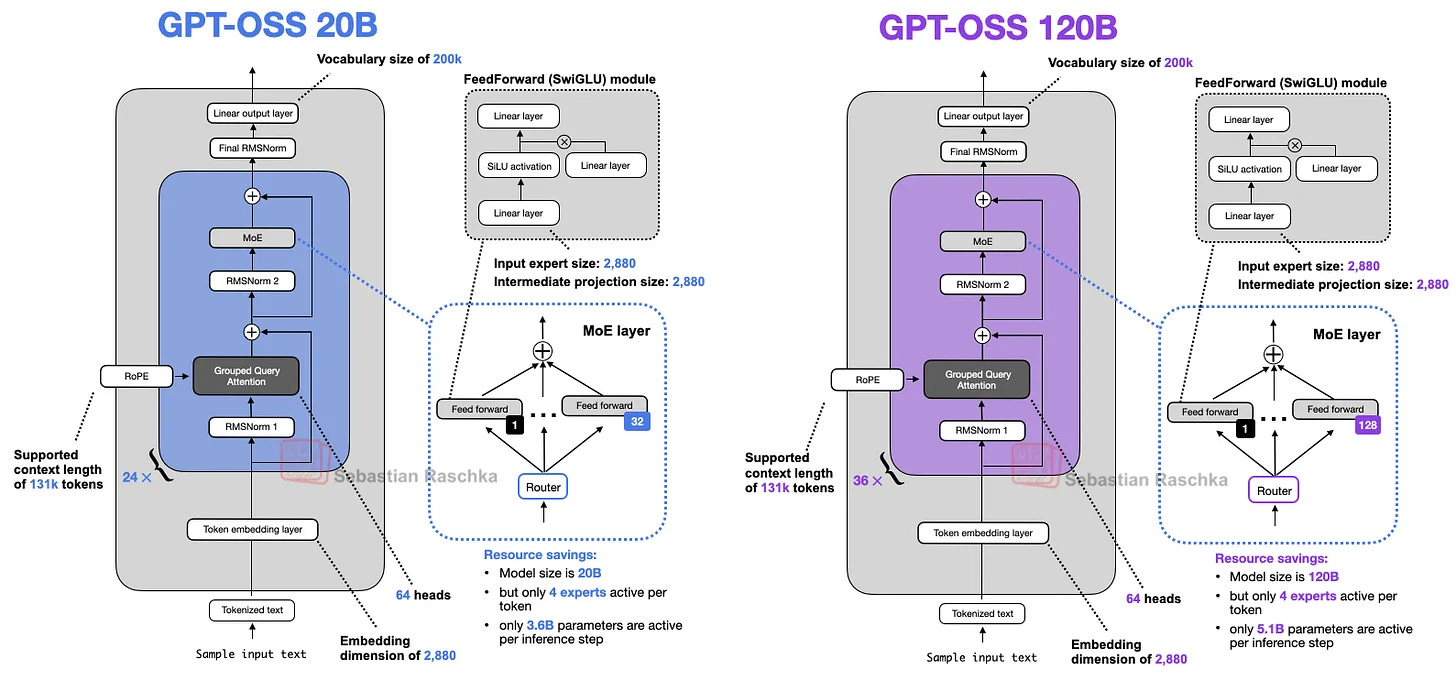

GPT-OSS vs. Qwen3 and a detailed look at how things evolved since GPT-2 by

- A comprehensive analysis of OpenAI's first open-weight models since GPT-2. Essential reading for understanding how transformer architectures have evolved and what makes these new models significant for local deployment.

The current state of LLM-driven development - A practical guide to integrating LLMs into coding workflows. Covers what works, what doesn't, and how to build effective AI-assisted development practices. Particularly valuable for understanding agent tools and when to use them.

A better path to pruning large language models - Amazon's research on "Prune Gently, Taste Often" shows how to compress 7B-parameter models in under 10 minutes on a single GPU with a 32% performance improvement. A critical technique as efficiency becomes more important than raw size.

GPT-5: Key characteristics, pricing and model card by Simon Willison

- Simon Willison's detailed technical analysis of GPT-5's hybrid architecture, pricing structure, and system card. Essential reading for understanding how GPT-5 operates as a multi-model system with different underlying components for different tasks.

Engineering.fyi – Search across tech engineering blogs in one place - Centralized search across major tech engineering blogs. Valuable resource for engineers to discover technical content and implementation patterns from companies like Google, Netflix, Uber, and other major tech organizations.
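For readers new to pruning, the simplest baseline behind the Amazon pruning piece above is unstructured magnitude pruning: zero out the weights with the smallest absolute values. The sketch below is that generic baseline in plain Python, not the "Prune Gently, Taste Often" method itself; `magnitude_prune` is a hypothetical helper name. In practice LLM pruning is typically done layer by layer, often with calibration data or fine-tuning to recover quality, and zeroed weights usually only speed things up with sparse kernels or structured pruning.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Unstructured magnitude pruning: zero out the `sparsity` fraction of weights with the smallest |w|."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # how many weights to remove
    # Rank indices by absolute value and mark the k smallest for removal.
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_drop = set(by_magnitude[:k])
    return [0.0 if i in to_drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    layer = [0.1, -2.0, 0.05, 3.0, -0.4, 0.7]
    print(magnitude_prune(layer, sparsity=0.5))  # → [0.0, -2.0, 0.0, 3.0, 0.0, 0.7]
```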

Other interesting things this week

Product Launches

Claude Opus 4.1 - Scores 74.5% on SWE-bench Verified, a major upgrade for real-world coding tasks.

Jules, our asynchronous coding agent - Google's Gemini 2.5 Pro-powered coding agent goes public after generating 140,000+ public code improvements in beta.

Create personal illustrated storybooks in the Gemini app - Gemini generates 10-page books with custom art and audio in 45+ languages.

We're testing a new, AI-powered Google Finance - Google Finance reimagined with AI offers comprehensive responses to financial questions and includes advanced charting tools and a live news feed.

AI Developments

Amazon builds first foundation model for multirobot coordination - DeepFleet increases deployment efficiency by 10% using millions of hours of fulfillment center data.

The latest AI news we announced in July - Google expanded access to AI tools including AI Mode in Search, creative tools in Google Photos, and personalized shopping experiences.

How AI is helping advance the science of bioacoustics to save endangered species - Updated Perch AI model aids conservation with improved bird species predictions and adaptation to new and underwater environments.

Diffusion language models are super data learners - Research on diffusion language models and their data learning capabilities.

Technical Tools

Claude Code IDE integration for Emacs - Native integration with Claude Code CLI through Model Context Protocol with automatic project detection and tool support.

Introducing Open SWE: An Open-Source Asynchronous Coding Agent - LangChain's fully autonomous coding agent with demo and documentation available.

Launch HN: Halluminate (YC S25) – Simulating the internet to train computer use - Building Westworld, a fully-simulated internet for training computer use agents with synthetic versions of common applications.

Things that helped me get out of the AI 10x engineer imposter syndrome - Addresses the psychological challenges of AI-enhanced productivity claims and provides practical advice for engineers navigating the hype.

Research & Analysis

How a Research Lab Made Entirely of LLM Agents Developed Molecules That Can Block a Virus - Stanford/Chan Zuckerberg Biohub's Virtual Lab uses GPT-4o agents to develop experimentally validated nanobodies targeting SARS-CoV-2.

The Roadmap of Mathematics for Machine Learning by

- Comprehensive guide to linear algebra, calculus, and probability theory fundamentals.

- Comprehensive analysis covering GPT-5's three models (GPT-5, GPT-5-mini, GPT-5-nano) and the multipart system with a fast model, a deeper reasoning model, and a real-time router.

Using AI to Augment, Not Automate Your Writing by

- Framework for implementing AI assistance in writing workflows while maintaining human creativity and avoiding over-automation.

How to Instantly Render Real-World Scenes in Interactive Simulation - NVIDIA's NuRec and 3DGUT reconstruct photorealistic 3D scenes from sensor data for deployment in simulation environments.
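Several of the GPT-5 write-ups above describe a real-time router that decides whether a request goes to a fast model or a deeper reasoning model. OpenAI's actual routing logic isn't reproduced here; the sketch below only illustrates the general pattern with keyword and length heuristics we made up. The model identifiers, `REASONING_HINTS`, and `max_fast_chars` are all hypothetical, and a production router might well be a trained classifier instead.

```python
from dataclasses import dataclass

# Hypothetical model identifiers for illustration; not real API model names.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Toy signals that a request might benefit from deeper reasoning (an assumption, not OpenAI's rules).
REASONING_HINTS = ("think hard", "step by step", "debug this", "write a proof")


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


def route(prompt: str, max_fast_chars: int = 2000) -> Route:
    """Pick a model for a request using cheap heuristics, before any model is called."""
    text = prompt.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return Route(REASONING_MODEL, "explicit request or reasoning-heavy task")
    if len(prompt) > max_fast_chars:
        return Route(REASONING_MODEL, "long input")
    return Route(FAST_MODEL, "short, simple request")


if __name__ == "__main__":
    for p in ["What's the capital of France?", "Think hard about this race condition"]:
        print(p, "->", route(p))
```

The design point is that routing must be much cheaper than the models it chooses between; otherwise the savings from sending easy requests to the fast model disappear.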

Industry Analysis

GitHub is no longer independent at Microsoft after CEO resignation - GitHub leadership now reports directly to Microsoft's CoreAI group following CEO departure.

Inside Tim Cook's push to get Apple back in the AI race - Apple Intelligence features won't reach most users until 2025-2026 while competitors ship AI features widely.

Alan Turing Institute: Humanities are key to the future of AI - New initiative "Doing AI Differently" advocates for human-centered approach to AI development.

Infrastructure & Energy

NVIDIA latest: Blackwell GPU and software updates - RTX PRO 6000 Blackwell Server Edition offers 45x better performance and 18x higher energy efficiency compared to CPU-only systems.

Security & Concerns

My Lethal Trifecta talk at the Bay Area AI Security Meetup - Simon Willison covers prompt injection vulnerabilities and challenges in securing systems using Model Context Protocol.
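Willison's "lethal trifecta" is the combination of an AI agent that has access to private data, is exposed to untrusted content, and can communicate externally; together, these let a prompt injection exfiltrate data. The sketch below is a coarse audit that flags when a tool set grants all three. The `Tool` schema and the example tools are hypothetical, and a check like this is not a defense against prompt injection itself; it only helps spot configurations where one leg should be removed.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Tool:
    """Capabilities an agent gains by having this tool (hypothetical schema)."""
    name: str
    reads_private_data: bool = False
    ingests_untrusted_content: bool = False
    communicates_externally: bool = False


def has_lethal_trifecta(tools: Iterable[Tool]) -> bool:
    """True if the combined tool set gives the agent all three trifecta capabilities."""
    tool_list = list(tools)
    return (
        any(t.reads_private_data for t in tool_list)
        and any(t.ingests_untrusted_content for t in tool_list)
        and any(t.communicates_externally for t in tool_list)
    )


if __name__ == "__main__":
    read_email = Tool("read_email", reads_private_data=True, ingests_untrusted_content=True)
    http_fetch = Tool("http_fetch", ingests_untrusted_content=True, communicates_externally=True)
    print(has_lethal_trifecta([read_email]))              # → False
    print(has_lethal_trifecta([read_email, http_fetch]))  # → True
```

The check is deliberately about the combined tool set: neither example tool is dangerous on its own, but an agent holding both has all three legs.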

Community highlights

This section is coming soon! I want to highlight more of what you are all doing whether it's building, writing, teaching, or more.

The jobs section will move to its own post and remain in the Discord server feed for paid members. I've been struggling to find a way to share it effectively, and I've concluded that this is the right choice.

If you found this helpful, consider supporting ML for SWEs by becoming a paid subscriber.

Always be (machine) learning,

Logan