ML for SWEs 68: 43 Years Later, Developer Productivity Is Still a Billion-Dollar Problem

43 years later and we still can't measure developer productivity

Welcome to Machine Learning for Software Engineers!

This newsletter helps software engineers understand machine learning, artificial intelligence, and the industry as a whole. We focus on its impact, how to use it, and how to build systems with it.

Each week I share a recap of must-read resources, current events, hiring updates, AI funding, and more. If that interests you, consider becoming a paid subscriber to support the newsletter.

We’re close to bestseller status, and until we reach it, subscribers can lock in a price of just $10 a year. Remember that many companies cover learning resources like this, so check if yours will sponsor your subscription.

I’d also love your feedback on this week’s new format. The goal is to share more, keep things timely, and make the roundup more valuable for you.

One of the most important things in business is gathering metrics and analyzing data to determine whether large-scale investments will pay off for a company in the long run.

This has been a huge topic of discussion in the software industry for the past few weeks. Companies are spending billions of dollars on AI and thousands of hours integrating it into software development workflows, but they’re quickly realizing how difficult it is to quantify AI’s impact.

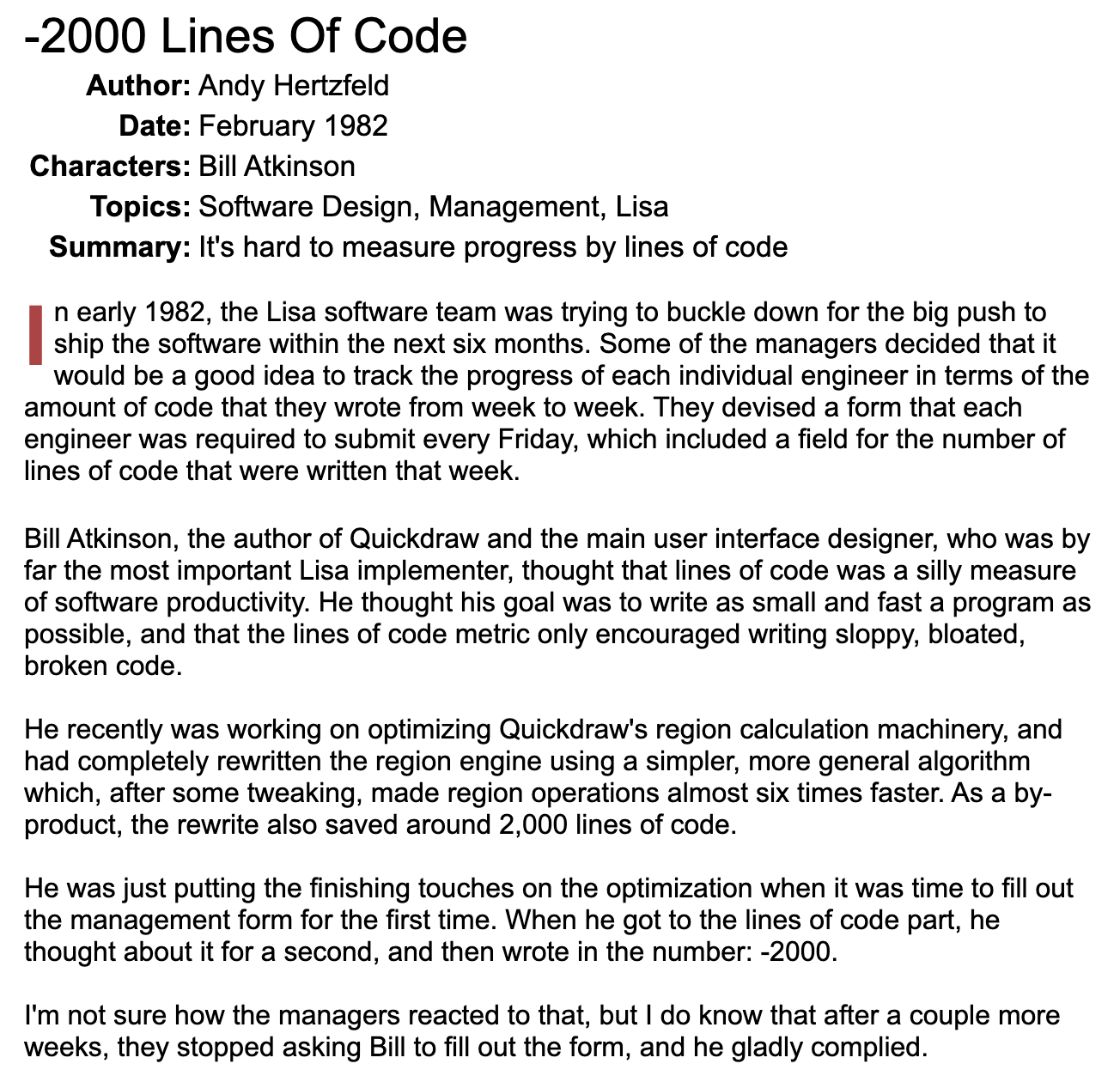

To understand this, here’s some important software engineering lore:

Why do I bring this up? It’s been 43 years and we still don’t have a definitive approach for measuring software development productivity.

The problem is that companies are investing heavily in AI to improve productivity without a reliable way to measure whether they're receiving a return on that investment. So every company is tasking its employees with figuring this out.

It seems like it should be a simple problem, but we don't actually know which metrics are good measures of developer productivity.

Every software engineer knows that commonly collected metrics such as lines of code written, tickets completed, and documents contributed to are poor measures of productivity.

One could argue that it can be measured by the output of a developer’s work, but even that is difficult to quantify. That’s why so many companies have grueling promotion processes where managers will sit in meetings for hours or days on end to debate the impact of an individual’s work.

I’ve seen many measurements of developer productivity, but none have been definitive. End-to-end work time might be a good bet, but there are many confounding variables that contribute to that measurement (the type of task, the tools required, the potential interruptions, etc.).

Adding the layer of AI-assisted versus non-AI work makes these measurements even more complicated and ambiguous. There are many types of "AI use" for developers (chatbots for questions, summarization tools, coding agents, CLI agents, etc.), so how should we slice metrics by these categories, and how should we interpret them in aggregate?
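To make the confounding problem concrete, here is a minimal Python sketch using made-up numbers. The records, the `ai_mode` labels, and the hour values are hypothetical and only illustrate the statistics; they are not measurements from any study. It compares mean end-to-end time for AI-assisted and unassisted tasks, first in aggregate and then within each task type.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records: (task_type, ai_mode, end_to_end_hours).
# The numbers are invented purely to illustrate confounding.
TASKS = [
    ("small", "none", 2.0), ("small", "none", 2.0),
    ("small", "none", 2.0), ("small", "none", 2.0),
    ("small", "agent", 1.5),
    ("large", "none", 10.0),
    ("large", "agent", 8.0), ("large", "agent", 8.0),
    ("large", "agent", 8.0), ("large", "agent", 8.0),
]


def mean_hours_by(records, key):
    """Group records by key(record) and return the mean hours for each group."""
    groups = defaultdict(list)
    for rec in records:
        groups[key(rec)].append(rec[2])
    return {k: mean(v) for k, v in groups.items()}


# Naive aggregate: compare AI modes while ignoring task type.
overall = mean_hours_by(TASKS, key=lambda r: r[1])
# Stratified: compare AI modes within each task type.
stratified = mean_hours_by(TASKS, key=lambda r: (r[0], r[1]))

print("overall:", overall)
print("stratified:", stratified)
```

In this toy data the agent looks slower overall (6.7 hours versus 3.6) only because it was mostly used on large tasks; within each task type it is faster. This is Simpson's paradox, and every extra slicing dimension, such as chatbot versus CLI agent, multiplies the number of groups and shrinks each one.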

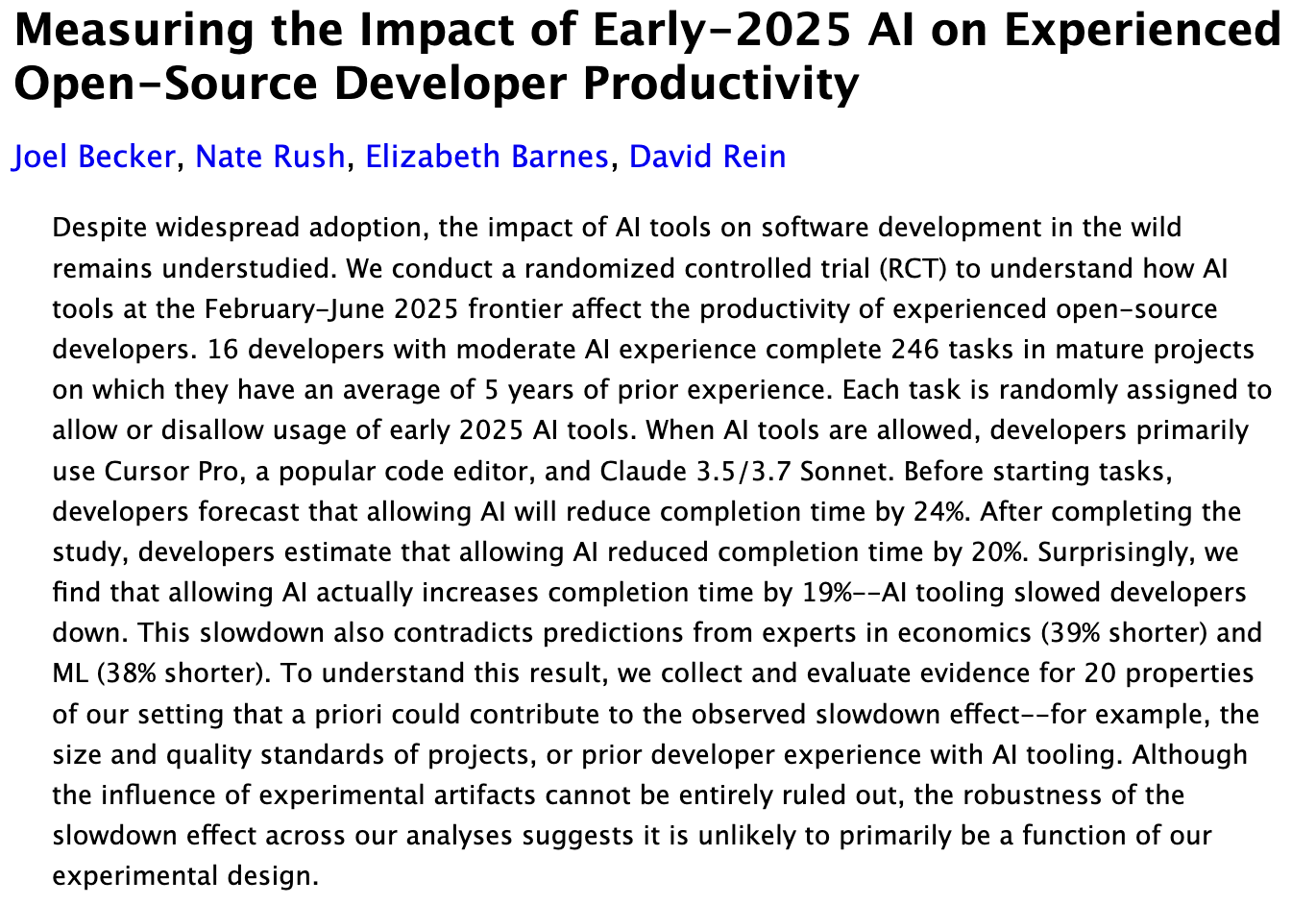

A solution to this problem is in extremely high demand. The general sentiment (largely driven by clicks and engagement) is that AI can fully replace developers, but studies suggest AI can sometimes make developers slower even when they think it's making them faster.

To complicate things further, any developer who has used an AI tool can recognize its potential, and most have been helped by it on certain tasks. But the feedback I've gotten from senior developers is that many tasks take too much coaching for the AI to handle and would have been faster to do themselves.

The important takeaway: developer productivity has been at the cutting edge of software engineering research for 43 years. Now billions of dollars are being sunk into improving it with no reliable way to measure a return on that investment.

We also can’t truly say whether AI is, in aggregate, helpful or harmful for software development.

As someone who works on AI developer tools, I believe they're undoubtedly the future, but they need to be done right. It's a difficult space with many interesting problems to solve, and that's why I enjoy working on it so much.

I’d love to hear your thoughts: has AI genuinely made you more productive, or has it slowed you down?

In case you missed the weekly ML for SWEs from two weeks ago, we discussed AI creating a 3-day work week. You can find that here:

Must Reads

Deep Learning Focus — REINFORCE: Easy Online RL for LLMs — Why simple policy-gradient methods (REINFORCE/RLOO) can rival PPO for LLM training without orchestration complexity or instability, making RLHF/RLAIF workflows easier to adopt in engineering practice. Source: Deep Learning Focus

NLP Newsletter — Top AI Papers of the Week — Summaries of Meta’s ARE simulator and Gaia2 benchmark, ATOKEN unified multimodal tokenization, and Code World Model. A compact survey of the most relevant research progress. Source: NLP Newsletter

Artificial Intelligence Made Simple — Weekly AI Updates Recap — Covers the most important AI announcements of the week, including model releases, regulatory developments, and ecosystem shifts, with thoughtful framing for engineers. Source: Artificial Intelligence Made Simple

Artificial Ignorance — Weekly AI Roundup — Sharp commentary on major releases, infra announcements, and platform features, filtering hype and highlighting what actually matters for builders. Source: Artificial Ignorance

LLM Quantization — What engineers actually need — Practical guidance on PTQ vs QAT, INT8/INT4 trade-offs, calibration strategies, and toolchains (GPTQ, AWQ, bitsandbytes, GGUF) for deploying LLMs in production. Source: Full Stack Agents
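The INT8/INT4 trade-off in the quantization item can be illustrated with the simplest post-training scheme: symmetric, per-tensor "absmax" round-to-nearest quantization. This is a minimal plain-Python sketch that assumes a single scale per tensor and no calibration data. The helper names are hypothetical, and toolchains like GPTQ and AWQ use more sophisticated, calibration-driven methods.

```python
def quantize_absmax(weights, bits=8):
    """Symmetric per-tensor quantization with an absmax scale (hypothetical helper).

    Maps the largest |weight| to the largest positive integer code
    (127 for INT8, 7 for INT4) and rounds every weight to the nearest code.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0  # guard against an all-zero tensor
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]  # toy values, not from a real model

for bits in (8, 4):
    codes, scale = quantize_absmax(weights, bits)
    restored = dequantize(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"INT{bits}: codes={codes} scale={scale:.4f} max_err={max_err:.4f}")
```

Going from 8 to 4 bits multiplies the step size (the scale) by 127/7, roughly 18, so the worst-case rounding error, at most half a step, grows by the same factor. That is the core INT8/INT4 trade-off that more sophisticated methods aim to reduce.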

Product and Industry

Claude Sonnet 4.5 charts — SWE-bench Verified at 82% and “computer use” workflows that push agentic coding forward. Source: Department of Product

OpenAI: in-chat purchasing (ACP) — “Buy It in ChatGPT” via the Agentic Commerce Protocol with Stripe & Shopify. Source: OpenAI

OpenAI on OpenAI — How they use models for support, GTM, and inbound conversions; lessons for teams. Sources: Support, GTM, Inbound, Internal playbook

Gemini Robotics 1.5 — Long-horizon embodied agents that perceive, plan, use tools, and act. Source: Google DeepMind Blog

Perplexity launches Search APIs — New APIs for embedding Perplexity’s search and Q&A into other applications. Source: Department of Product

Research

REINFORCE for LLMs — Practical online RL alternatives to PPO for LLM alignment. Source: Deep Learning Focus

This week’s top papers — Agents, multimodal tokenization, and code-as-environment. Source: NLP Newsletter

Localized adversarial attacks — Systematic benchmarks of targeted, imperceptible noise. Source: arXiv:2509.22710

In-context continual learning — Evidence that ICL can accumulate cross-task knowledge over time. Source: arXiv:2509.22764

Communication-efficient, interoperable distributed learning — Heterogeneous models with a shared fusion-layer interface. Source: arXiv:2509.22823

On the capacity of self-attention — Theoretical limits on information storage/propagation. Source: arXiv:2509.22840
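The REINFORCE item above is easiest to see in code. Below is a minimal sketch of the REINFORCE leave-one-out (RLOO) variant, assuming k sampled completions per prompt, one scalar reward per completion, and precomputed sequence log-probabilities. The helper names are hypothetical, and a real trainer would compute the loss in a framework with autograd (such as PyTorch) so it can be backpropagated. Each completion's baseline is the mean reward of the other k - 1 samples, so no separate learned value model (as in PPO) is needed.

```python
def rloo_advantages(rewards):
    """Leave-one-out advantages for k completions sampled from the same prompt.

    Each completion is compared against the mean reward of the other k - 1 samples.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


def reinforce_loss(seq_logprobs, advantages):
    """REINFORCE surrogate loss: -mean(advantage * log pi(completion | prompt)).

    Advantages are treated as constants; with autograd, minimizing this loss
    follows the policy-gradient estimate with a baseline.
    """
    terms = [a * lp for a, lp in zip(advantages, seq_logprobs)]
    return -sum(terms) / len(terms)


# Toy example: 4 completions for one prompt, scored by a hypothetical binary reward.
rewards = [1.0, 0.0, 0.0, 1.0]
seq_logprobs = [-12.3, -9.8, -15.1, -11.0]  # made-up summed token log-probs
advantages = rloo_advantages(rewards)
print(advantages)
print(reinforce_loss(seq_logprobs, advantages))
```

Completions that beat their siblings' average get a positive advantage and have their log-probability pushed up, while the rest are pushed down; with this baseline the advantages always sum to zero across the group.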

Engineering and Tools

LLM quantization guide — PTQ/QAT, INT8/INT4, calibration, and production trade-offs. Source: Full Stack Agents

Claude Code 2.0 — npm package for more autonomous code assistance. Source: npmjs.com

FastMCP — Practical path to build Model Context Protocol servers without the boilerplate. Source: Technically

Learning Resources

Why models hallucinate — Causes, failure modes, and mitigation levers engineers can apply. Source: Technically

Funding

Cerebras Systems — $1.1B Series G at $8.1B — Fidelity & Atreides led; 1789 Capital, Alpha Wave, Altimeter, Benchmark, Tiger Global, Valor joined. Source: Axios

Rebellions — $250M Series C at $1.4B — Investors include Arm Holdings, Samsung Ventures, Pegatron, Lion X Ventures, KDB, Korelya. Source: Axios

Eve — $103M Series B (legal AI for plaintiff firms) — Led by Spark Capital; a16z, Lightspeed, Menlo. Source: Axios

Zania — $18M Series A (agentic AI security) — Led by NEA; Palm Drive Capital, Anthology Fund. Source: Axios

Alex — $17M Series A (AI recruiter) — Led by Peak XV; YC, Uncorrelated. Source: Axios

Augmented Industries — €4.5M pre-seed (industrial AI) — Led by b2venture. Source: Axios

Who’s Hiring

OpenAI — Software Engineer (Seattle, WA) Source: LinkedIn

Turing — Senior Software Engineer (Remote, US) Source: LinkedIn

Google — SWE Early Career, Campus 2026 (Pittsburgh, PA) Source: LinkedIn

Google — SWE Early Career, Campus 2026 (New York, NY) Source: LinkedIn

NVIDIA — Sr SWE, Data Infra for Robotics Research (US) Source: LinkedIn

Microsoft — Senior Software Engineer (US) Source: LinkedIn

Microsoft — Senior Software Engineer (Redmond, WA) Source: LinkedIn

Toast — Senior Software Engineer (US) Source: LinkedIn

Netflix — ML Engineer 5, Content & Studio (US) Source: LinkedIn

Yahoo — Machine Learning Engineer (US) Source: LinkedIn

Pinterest — Machine Learning Engineer (US) Source: LinkedIn

Mercor — Senior Machine Learning Engineer (Remote, up to $150/hr) Source: LinkedIn

Safety and Ethics

California SB 53 signed — Requires frontier AI developers operating in CA to publish transparency reports and report critical safety incidents. Source: Office of Governor Newsom

Thanks for reading!

Always be (machine) learning,

Logan
